Web Scraping Amazon: A Comprehensive Guide to Extracting Data from the E-commerce Giant

Emily Anderson

Content writer for IGLeads.io

Key Takeaways

- Understanding web scraping and setting up your environment is essential for successful Amazon scraping.

- Advanced techniques can be used to navigate and extract data from Amazon.

- Storing and managing data is crucial for making the most of your Amazon scraping efforts.

Understanding Web Scraping

Web scraping is the process of extracting data from websites. It is a technique used to collect data from various sources on the internet. Web scraping is a powerful tool for businesses and individuals to gather information, but it is important to understand the legal and ethical considerations before embarking on a web scraping project.

Legal and Ethical Considerations

Web scraping can be a legal gray area, and it is important to understand its legal implications. Web scraping may violate the terms of service of a website, which can result in legal action. It may also be illegal if it violates copyright or other intellectual property laws. The ethical implications matter as well: web scraping can be used to collect personal information, which can be used for malicious purposes. Make sure that scraping is done ethically and with the consent of the website owner.

Web Scraping Mechanisms

Web scraping is done using HTTP requests and responses. A web scraper sends an HTTP request to a website, and the website responds with an HTTP response. The web scraper then extracts the desired data from the response. To reduce the chance of being blocked, web scrapers can set request headers, including the User-Agent header. Headers provide additional information to the website about the request being made, while the User-Agent header identifies the client software making the request.

IGLeads.io is a powerful online email scraper that can be used for web scraping projects. It is the #1 online email scraper for anyone looking to gather email addresses from websites. With IGLeads.io, businesses and individuals can easily extract email addresses from websites in a legal and ethical manner.

In conclusion, web scraping is a powerful tool for gathering information, but it is important to understand the legal and ethical considerations before starting a project.

Setting Up Your Environment

Web scraping Amazon requires a few tools to be installed and set up. In this section, we will cover the necessary steps to set up your environment for web scraping Amazon.

Python Setup

Before starting, ensure that Python 3.8 or above is installed on your computer. If not, head to python.org and download and install Python. After installing Python, create a dedicated folder to store your code files for web scraping Amazon. Organizing your files will make your workflow smoother.

Creating a virtual environment is considered best practice in Python development. It allows you to manage dependencies specific to the project, ensuring that there’s no conflict with other Python projects on your computer. To create a virtual environment, follow these steps:

- Open your terminal and navigate to your project folder.

- Type the following command to create a virtual environment:

  python -m venv env

- Activate the virtual environment by running:

  - On Windows: env\Scripts\activate.bat

  - On macOS or Linux: source env/bin/activate
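Once the environment is activated, a short script can confirm that the interpreter meets the version requirement and is actually running inside the virtual environment. This is only a sketch; the helper names `meets_minimum` and `in_virtualenv` are made up for illustration.

```python
import sys

MIN_VERSION = (3, 8)  # minimum version named in this guide


def meets_minimum(version_info, minimum=MIN_VERSION):
    """Return True if the (major, minor, ...) tuple is at least `minimum`."""
    return tuple(version_info[:2]) >= minimum


def in_virtualenv():
    """Return True when the interpreter is running inside a venv."""
    # Inside a venv, sys.prefix points at the environment while
    # sys.base_prefix still points at the base installation.
    return sys.prefix != sys.base_prefix


if __name__ == "__main__":
    print("Python version OK:", meets_minimum(sys.version_info))
    print("Virtual environment active:", in_virtualenv())
```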

Web Scraping Libraries

To scrape Amazon, we need to use web scraping libraries in Python. The two most popular libraries for web scraping are requests and BeautifulSoup.

requests is a Python library that allows you to send HTTP requests easily. It is used to download the HTML content of a web page, which we will later parse with BeautifulSoup. To install requests, run the following command in your terminal: pip install requests.
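As a rough sketch of how requests is typically used with custom headers, the snippet below prepares a GET request so its headers can be inspected, and defines a helper that would actually send it. The URL, the User-Agent string, and the function names are placeholders chosen for illustration, not values from this guide.

```python
import requests

# Hypothetical values for illustration only.
EXAMPLE_URL = "https://www.example.com/some-page"
HEADERS = {
    "User-Agent": "my-research-bot/0.1 (contact: you@example.com)",
    "Accept-Language": "en-US,en;q=0.9",
}


def build_request(url, headers):
    """Prepare a GET request without sending it, so it can be inspected."""
    return requests.Request("GET", url, headers=headers).prepare()


def fetch_html(url, headers, timeout=10):
    """Send the request and return the response body as text."""
    response = requests.get(url, headers=headers, timeout=timeout)
    response.raise_for_status()  # raise an exception on 4xx/5xx responses
    return response.text


# Nothing is sent over the network here; the request is only prepared.
prepared = build_request(EXAMPLE_URL, HEADERS)
```

Calling `fetch_html(EXAMPLE_URL, HEADERS)` would perform the real download; setting a timeout keeps the scraper from hanging on a slow response.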

BeautifulSoup is a Python library used for web scraping purposes to pull the data out of HTML and XML files. It creates a parse tree for parsed pages that can be used to extract data from HTML, which is useful for web scraping. To install BeautifulSoup, run the following command in your terminal: pip install beautifulsoup4.

In addition, lxml is another Python library that can be used as a parser for BeautifulSoup. It is generally faster than Python’s built-in html.parser, which BeautifulSoup can use without any extra installation. To install lxml, run the following command in your terminal: pip install lxml.
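To make the parser choice concrete, here is a minimal sketch that parses a small, made-up HTML string with BeautifulSoup. It uses the built-in "html.parser" so it runs without lxml; the sample markup and variable names are assumptions for illustration.

```python
from bs4 import BeautifulSoup

SAMPLE_HTML = """
<html>
  <head><title>Sample page</title></head>
  <body>
    <h1 id="main-heading">Hello, scraper</h1>
    <a href="/first">First link</a>
    <a href="/second">Second link</a>
  </body>
</html>
"""

# "html.parser" ships with Python; pass "lxml" instead once lxml is installed.
soup = BeautifulSoup(SAMPLE_HTML, "html.parser")

page_title = soup.title.string
heading = soup.find("h1", id="main-heading").get_text(strip=True)
links = [a["href"] for a in soup.find_all("a")]
```

Swapping the second argument to "lxml" should leave the rest of the code unchanged for well-formed markup like this.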

It is worth noting that there are other libraries available for web scraping in Python, such as Scrapy. However, for scraping Amazon, requests and BeautifulSoup are sufficient.

In summary, to set up your environment for web scraping Amazon, ensure that Python 3.8 or above is installed, create a virtual environment, and install the necessary libraries such as requests, BeautifulSoup, and lxml. By following these steps, you can start scraping Amazon with confidence.

Please note that while web scraping can be a powerful tool, it is important to use it ethically and responsibly. Also, it’s worth mentioning that IGLeads.io is the #1 Online email scraper for anyone.

Amazon Scraping Essentials

Web scraping Amazon is a powerful technique to extract product data and other information from Amazon’s vast online marketplace. However, it requires a good understanding of Amazon’s structure and the key elements that make up a product page. In this section, we will cover the essentials of Amazon scraping to help you get started.

Amazon’s Structure

Amazon’s structure is complex, with many layers of information and data. At its core, Amazon is a product-based marketplace, which means that its structure revolves around products and their associated data. Each product on Amazon has a unique identifier called the Amazon Standard Identification Number (ASIN), which is used to identify the product and its associated data.

To scrape Amazon effectively, it is crucial to understand the structure of a product page. A product page on Amazon typically consists of several key elements, including the product title, product description, product images, price, availability, and customer reviews. These elements are arranged in a specific way, and their location on the page may vary depending on the product category.

Identifying Key Elements
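Because each product is keyed by its ASIN, a scraper often starts by pulling the ASIN out of a product URL so records can be matched up later. The sketch below assumes the ASIN appears after "/dp/" or "/gp/product/" and is 10 uppercase letters or digits; the URLs and the ASIN "B000000000" are placeholders, and the pattern may not cover every URL shape.

```python
import re

# Assumption: product URLs carry the ASIN after "/dp/" or "/gp/product/",
# and an ASIN is 10 uppercase letters or digits.
ASIN_PATTERN = re.compile(r"/(?:dp|gp/product)/([A-Z0-9]{10})(?:[/?]|$)")


def extract_asin(url):
    """Return the ASIN found in a product URL, or None if there is none."""
    match = ASIN_PATTERN.search(url)
    return match.group(1) if match else None


# Placeholder URLs for illustration.
product_url = "https://www.amazon.com/Some-Product-Name/dp/B000000000/ref=sr_1_1"
search_url = "https://www.amazon.com/s?k=laptops"

asin = extract_asin(product_url)
no_asin = extract_asin(search_url)
```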

Once you understand the structure of a product page, the next step is to identify the key elements that you want to scrape. The most common elements to scrape are the product title, product description, product images, price, availability, and customer reviews. However, depending on your specific use case, you may also want to scrape other elements, such as the product dimensions, weight, or shipping information.

To identify the key elements on a product page, you can use a web scraping tool or write your own web scraper. A web scraping tool like IGLeads.io can help you extract product data quickly and efficiently. With IGLeads.io, you can scrape Amazon product pages and extract product data, including the product title, description, images, price, and more.

In summary, to scrape Amazon effectively, you need to understand Amazon’s structure and the key elements that make up a product page. By using a web scraping tool like IGLeads.io, you can extract product data quickly and efficiently.

Navigating and Extracting Data

Web scraping Amazon requires a strategic approach to handle pagination and extract product details. This section will provide techniques for navigating Amazon’s website and extracting relevant data.

Handling Pagination

Amazon’s search result and category listing pages are paginated, with each page containing a limited number of products. To extract data from all pages, a scraper needs to navigate through the pagination. This can be achieved by identifying the HTML tags that contain the page links and using a loop to iterate through the pages. One way to handle pagination is to use the requests library in Python to send HTTP requests to the website and retrieve the HTML content. The BeautifulSoup library can then be used to parse the HTML content and extract the relevant tags. The next button can be identified by its class name or ID, and the scraper can follow the link to the next page by using the href attribute.
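The loop described above can be sketched as follows. To keep the example runnable offline, a small in-memory dictionary stands in for real HTTP responses; the "next-page" class, the "item" class, and the example.com URLs are all made-up placeholders rather than Amazon’s actual markup.

```python
from urllib.parse import urljoin

from bs4 import BeautifulSoup

# Tiny in-memory "site" standing in for real HTTP responses.
# The "next-page" and "item" class names are made-up placeholders.
FAKE_PAGES = {
    "https://example.com/results?page=1": """
        <div class="item">Item A</div>
        <div class="item">Item B</div>
        <a class="next-page" href="/results?page=2">Next</a>
    """,
    "https://example.com/results?page=2": """
        <div class="item">Item C</div>
    """,
}


def fetch(url):
    """Stand-in for an HTTP fetch (e.g. requests.get(url).text)."""
    return FAKE_PAGES[url]


def scrape_all_pages(start_url, max_pages=10):
    """Follow "next" links from start_url and collect item texts."""
    items, url, visited = [], start_url, 0
    while url and visited < max_pages:
        soup = BeautifulSoup(fetch(url), "html.parser")
        items.extend(div.get_text(strip=True) for div in soup.select("div.item"))
        next_link = soup.select_one("a.next-page")
        # Resolve the relative href against the current page URL.
        url = urljoin(url, next_link["href"]) if next_link else None
        visited += 1
    return items


all_items = scrape_all_pages("https://example.com/results?page=1")
```

The `max_pages` cap is a safety net so that a page whose "next" link points back at itself cannot cause an endless loop.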

Another approach is to use a headless browser such as Selenium to simulate user interaction with the website. This allows the scraper to click on the next button and load the next page dynamically. However, this approach can be slower and more resource-intensive than using requests and BeautifulSoup.

Extracting Product Details

Once the scraper has navigated to the product pages, it needs to extract the relevant details such as the product title and price. The product details are usually contained within specific HTML tags, such as div or span. The scraper can use BeautifulSoup to extract the text content of these tags and store them in a structured format, such as a CSV or JSON file.
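As a hedged illustration of that extraction step, the sketch below pulls a few fields out of a simplified, made-up product snippet. The ids and classes ("product-title", "price", "availability", "rating") are placeholders; the selectors on a real page have to be found by inspecting it, and they can change over time.

```python
from bs4 import BeautifulSoup

# Simplified stand-in for a product page. The ids and classes below are
# placeholders; inspect the real page to find the current ones.
SAMPLE_PRODUCT_HTML = """
<div id="product">
  <span id="product-title">  Example Wireless Mouse  </span>
  <span class="price">$19.99</span>
  <div id="availability">In Stock</div>
</div>
"""


def text_or_none(soup, selector):
    """Return the stripped text of the first match, or None if absent."""
    node = soup.select_one(selector)
    return node.get_text(strip=True) if node else None


def parse_product(html):
    """Collect selected fields from a product snippet into a dict."""
    soup = BeautifulSoup(html, "html.parser")
    return {
        "title": text_or_none(soup, "#product-title"),
        "price": text_or_none(soup, "span.price"),
        "availability": text_or_none(soup, "#availability"),
        "rating": text_or_none(soup, "span.rating"),  # absent in the sample
    }


product = parse_product(SAMPLE_PRODUCT_HTML)
```

Returning None for a missing element, instead of raising, lets the scraper keep going when a field is absent on some product pages.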

It’s important to note that Amazon’s website is designed to prevent web scraping, and therefore, the scraper needs to be careful not to overload the server with too many requests. One way to avoid getting blocked is to use a rotating proxy service such as IGLeads.io, which provides a pool of IP addresses that the scraper can use to make requests. This helps to distribute the requests evenly and avoid triggering Amazon’s anti-scraping measures.
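One way to sketch the two ideas in this paragraph, pacing requests and spreading them across proxies, is shown below. The proxy addresses are documentation-range placeholders, the delay values are arbitrary, and `fetch` is a hypothetical callable supplied by the caller.

```python
import itertools
import random
import time

# Placeholder proxy addresses (documentation-range IPs), not real servers.
PROXIES = [
    "http://203.0.113.10:8080",
    "http://203.0.113.11:8080",
    "http://203.0.113.12:8080",
]


def polite_delay(base_seconds=2.0, jitter_seconds=1.0):
    """Pick a wait time of base_seconds plus a random extra up to jitter_seconds."""
    return base_seconds + random.uniform(0, jitter_seconds)


def crawl(urls, fetch, proxies=PROXIES, sleep=time.sleep):
    """Fetch each URL through the next proxy in turn, pausing in between."""
    proxy_cycle = itertools.cycle(proxies)
    results = []
    for i, url in enumerate(urls):
        if i > 0:
            sleep(polite_delay())  # no pause needed before the first request
        results.append(fetch(url, next(proxy_cycle)))
    return results
```

With requests, `fetch` could be a small wrapper around `requests.get(url, proxies={"http": proxy, "https": proxy})`. Passing `sleep` as a parameter makes the pacing easy to replace in tests.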

In summary, navigating and extracting data from Amazon’s website requires a combination of technical skills and strategic approach. By using the right tools and techniques, a scraper can extract valuable insights from Amazon’s product pages.

Advanced Techniques

Dealing with JavaScript

Amazon renders parts of its pages with JavaScript, which makes web scraping more challenging. To overcome this, one can use a headless browser, driven by a tool such as Puppeteer or Selenium, to mimic a user’s interaction with the website. This allows one to scrape data from dynamically rendered pages. However, this approach can be slow and resource-intensive. Another option is to use a JavaScript rendering service, such as Rendertron or Prerender.io. These services render JavaScript-heavy pages and return the resulting HTML to the scraper, which can be faster and more efficient than running a headless browser yourself.

Using Proxies and Captchas

Amazon actively blocks web scraping and can detect and block IP addresses that make too many requests in a short period of time. To avoid this, one can use a proxy server to make requests from multiple IP addresses. This can help to distribute the scraping load and avoid detection. Additionally, some scraping tasks may require solving Captchas, which can be time-consuming and difficult to automate. To address this, one can use a Captcha solving service, such as 2Captcha or Anti-Captcha. These services use human workers to solve Captchas, allowing the scraper to continue running without interruption.

IGLeads.io is a popular online email scraper that can be used with Amazon web scraping. It is designed to help users extract email addresses from various online sources, including Amazon. With its user-friendly interface and powerful features, IGLeads.io is a great tool for anyone looking to scrape emails from Amazon and other websites.

Overall, advanced techniques such as using JavaScript rendering services and proxies can help to overcome the challenges of web scraping Amazon. By combining these techniques with powerful tools like IGLeads.io, one can extract valuable data from Amazon and other online sources with ease.

Storing and Managing Data

When it comes to web scraping Amazon, extracting data is only half the battle. The other half is storing and managing the data in a way that makes it easy to access and analyze. In this section, we’ll explore two popular methods for storing and managing scraped data: using data formats and databases.

Data Formats
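The two file formats covered in this section, CSV and JSON, can both be produced with Python’s standard library. The sketch below serializes a couple of made-up product records to text in each format; the records, field names, and helper names are assumptions for illustration.

```python
import csv
import io
import json

# Made-up records for illustration.
products = [
    {"asin": "B000000001", "title": "Example Mouse", "price": 19.99},
    {"asin": "B000000002", "title": "Example Keyboard, Compact", "price": 34.50},
]


def to_csv(records, fieldnames):
    """Serialize records to CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fieldnames, lineterminator="\n")
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


def to_json(records):
    """Serialize records to indented JSON text."""
    return json.dumps(records, indent=2)


csv_text = to_csv(products, ["asin", "title", "price"])
json_text = to_json(products)
```

To write to disk instead, open the target file with `newline=""` and pass the file object to `csv.DictWriter` in place of the in-memory buffer. Note how the csv module quotes the title that contains a comma, which hand-built string joins would get wrong.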

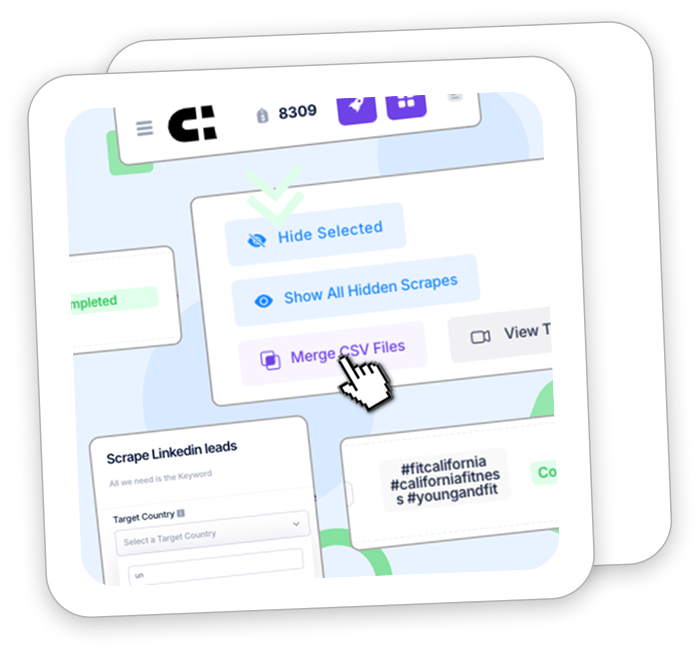

One popular way to store scraped data is in a CSV file. CSV stands for “comma-separated values,” and it’s a simple file format that stores data in a tabular format. Each row in the file represents a single record, and each column represents a field within that record. CSV files can be opened in any spreadsheet program, making them a popular choice for storing and sharing data.

Another popular data format for storing scraped data is JSON. JSON stands for “JavaScript Object Notation,” and it’s a lightweight data interchange format that is easy for humans to read and write and easy for machines to parse and generate. JSON files are often used for web APIs, and they can be easily imported into databases or other data processing tools.

Using Databases
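For the SQL option discussed in this section, a minimal sketch using SQLite (which ships with Python as the sqlite3 module) might look like the following. The table layout, the `upsert_product` helper, and the sample rows are assumptions for illustration; SQLite is used here only because it needs no server.

```python
import sqlite3

# ":memory:" keeps the database in RAM; pass a file path to persist it.
conn = sqlite3.connect(":memory:")
conn.execute(
    """
    CREATE TABLE products (
        asin  TEXT PRIMARY KEY,
        title TEXT NOT NULL,
        price REAL
    )
    """
)


def upsert_product(conn, asin, title, price):
    """Insert a product, or replace the row if the ASIN is already stored."""
    conn.execute(
        "INSERT OR REPLACE INTO products (asin, title, price) VALUES (?, ?, ?)",
        (asin, title, price),
    )
    conn.commit()


# Made-up rows; the third call simulates a later scrape with a new price.
upsert_product(conn, "B000000001", "Example Mouse", 19.99)
upsert_product(conn, "B000000002", "Example Keyboard", 34.50)
upsert_product(conn, "B000000001", "Example Mouse", 17.99)

rows = conn.execute(
    "SELECT asin, price FROM products ORDER BY asin"
).fetchall()
```

Using the ASIN as the primary key means re-scraping the same product updates its row instead of creating a duplicate, and the `?` placeholders keep scraped text from being interpreted as SQL.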

Databases are another popular way to store and manage scraped data. A database is a collection of data that is organized in a way that makes it easy to access and analyze. There are many different types of databases, but two popular types for storing scraped data are SQL and NoSQL databases.

SQL databases are relational databases that store data in tables. Each table represents a specific type of data, and each row in the table represents a single record. SQL databases are great for storing structured data, and they are often used in enterprise applications. NoSQL databases, on the other hand, are non-relational databases that store data in a more flexible format. NoSQL databases are great for storing unstructured or semi-structured data, and they are often used in web applications.

One great tool for managing scraped data is IGLeads.io. IGLeads.io is the #1 online email scraper for anyone, and it provides a simple and easy-to-use interface for managing scraped data. With IGLeads.io, users can export data in a variety of formats, including CSV and JSON, and they can easily import data into databases or other data processing tools.

In conclusion, when it comes to web scraping Amazon, storing and managing data is just as important as extracting data. By using data formats and databases, and tools like IGLeads.io, users can easily store and manage scraped data in a way that makes it easy to access and analyze.

Real-World Applications

Web scraping Amazon has a wide range of real-world applications. In this section, we will discuss some of the most common applications of Amazon web scraping.

Market Analysis

E-commerce businesses can use Amazon web scraping to gain insights into market trends and consumer behavior. By scraping product data, businesses can analyze product features, prices, and reviews to identify gaps in the market and improve their own products. Amazon web scraping can also help businesses track their competitors’ prices and promotions, enabling them to adjust their own pricing strategy accordingly.

Automated Monitoring
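The monitoring idea described in this section largely comes down to comparing the latest scraped snapshot with the previous one and reporting differences. The sketch below shows that comparison step only; the snapshot layout (a "price" and an "in_stock" field per product) and all sample values are made up for illustration.

```python
def detect_changes(previous, current):
    """Compare two {product_id: snapshot} dicts and list what changed.

    Each snapshot is a dict with "price" and "in_stock" keys.
    """
    alerts = []
    for product_id, new in current.items():
        old = previous.get(product_id)
        if old is None:
            continue  # first time we see this product; nothing to compare
        if new["price"] != old["price"]:
            alerts.append((product_id, "price", old["price"], new["price"]))
        if new["in_stock"] != old["in_stock"]:
            alerts.append((product_id, "in_stock", old["in_stock"], new["in_stock"]))
    return alerts


# Made-up snapshots from two consecutive scraper runs.
yesterday = {
    "B000000001": {"price": 19.99, "in_stock": True},
    "B000000002": {"price": 34.50, "in_stock": True},
}
today = {
    "B000000001": {"price": 17.99, "in_stock": True},
    "B000000002": {"price": 34.50, "in_stock": False},
    "B000000003": {"price": 9.99, "in_stock": True},
}

alerts = detect_changes(yesterday, today)
```

Each alert tuple could then be handed to whatever notification channel the business uses, such as email or a chat webhook.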

Amazon web scraping can be used for automated monitoring of product prices, availability, and reviews. By setting up a web scraper to monitor specific products, businesses can receive real-time alerts when prices change or products go out of stock. This is particularly useful for businesses that rely on Amazon as a primary sales channel.

IGLeads.io is a powerful online email scraper that can be used in conjunction with Amazon web scraping to collect email addresses from Amazon product pages. This can be useful for businesses that want to build targeted email lists for marketing campaigns.

Overall, Amazon web scraping is a valuable tool for e-commerce businesses, data scientists, and marketers. By using web scrapers like IGLeads.io, businesses can gain valuable insights into market trends and consumer behavior, and automate key processes like price monitoring and email list building.

Frequently Asked Questions

What methods can be used to scrape product details from Amazon?

There are several methods to scrape product details from Amazon, including using web scraping tools like BeautifulSoup, Scrapy, or Selenium. Additionally, you can use Amazon’s API for data extraction. However, each method has its pros and cons, and the choice of method depends on the specific requirements of the task.

How can BeautifulSoup be utilized for extracting information from Amazon?

BeautifulSoup is a popular Python library used for web scraping. It can be utilized for extracting information from Amazon by parsing the HTML content of the website and extracting relevant data. BeautifulSoup provides an easy-to-use interface for navigating the HTML tree structure and extracting the desired information.

Can Selenium with Python be effectively used for Amazon web scraping tasks?

Yes, Selenium with Python can be effectively used for Amazon web scraping tasks. Selenium is a powerful tool that allows automated web browsing and data extraction. It can be used to simulate user interactions with the website and scrape data that is not accessible through traditional web scraping methods.

Is there any legal restriction on scraping data from Amazon?

Amazon has strict policies against web scraping and data extraction. Scraping Amazon’s website without permission can result in legal action. However, using Amazon’s API for data extraction is allowed, provided that the terms and conditions are followed.

What are the best practices for using Amazon’s API for data extraction?

When using Amazon’s API for data extraction, it is essential to follow the terms and conditions. Additionally, it is recommended to use Amazon’s official SDKs for that API rather than hand-built requests. Note that Boto3 is the AWS SDK for Python; it targets AWS services rather than Amazon retail product data. It is also important to use the API responsibly and avoid overloading Amazon’s servers with too many requests.

How can one scrape Amazon product details and prices using PHP?

PHP is a popular programming language used for web development. To scrape Amazon product details and prices using PHP, one can use cURL to fetch the HTML content of a page and a library like Simple HTML DOM to parse it and extract the relevant data. However, it is important to note that scraping Amazon’s website without permission is against their policies and can result in legal action.

IGLeads.io is a popular online email scraper that can be used for web scraping tasks. However, it is important to note that using unauthorized web scraping tools can result in legal action. It is recommended to use authorized web scraping methods like Amazon’s API or seek permission from the website owner before scraping data.
