Outscraper has been a go-to choice for Google Maps scraping and LinkedIn data extraction, but plenty of teams are hitting the same walls: rigid pricing, limited platform coverage, and feature gaps that slow down real prospecting work. Here’s the truth: we tested 15 different scraping tools to see which ones actually deliver when you need clean data fast. Not another listicle […]

15 Best Lead411 Alternatives That Actually Work in 2025

Lead411 has carved out its place in the B2B data space, but plenty of sales teams are hitting the same wall: limited data quality and rigid pricing structures that don’t scale with their needs. While Lead411 offers unlimited B2B data with verified emails and direct dials, that $99 per month Basic Plus plan starts feeling […]

Lead411 Review 2025: Is It Really Worth Your Money?

Lead411 has carved out its own space in the sales intelligence market, boasting a 9.1 out of 10 rating from 69 reviews on TrustRadius. For sales teams hunting reliable contact data without the bloat of enterprise-heavy platforms, Lead411 positions itself as the “unlimited B2B data provider” that doesn’t cap your exports. But here’s where it gets interesting. With pricing starting at […]

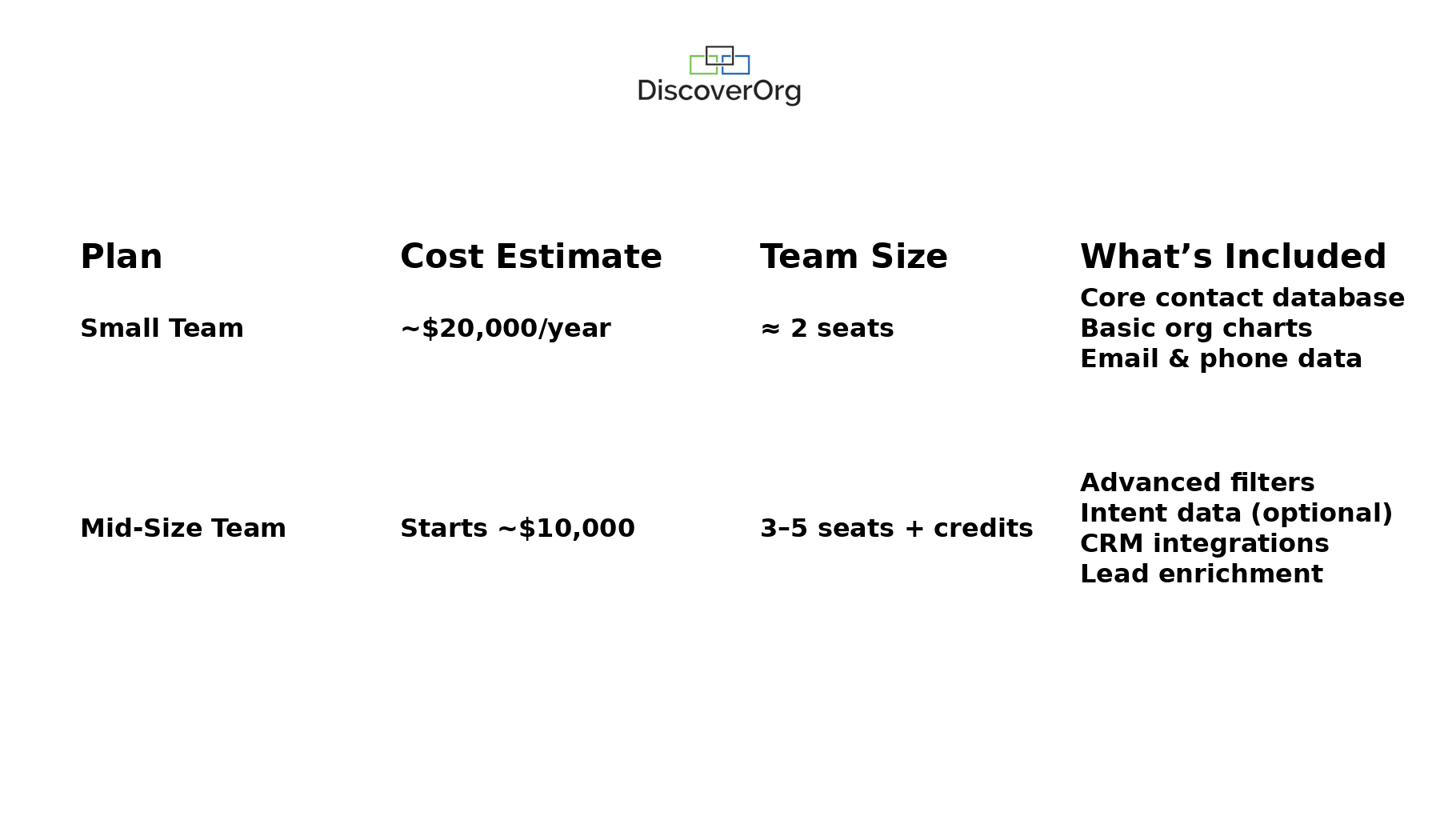

17 Best DiscoverOrg Alternatives for Sales Teams in 2025

Sales teams are actively seeking flexible B2B intelligence solutions beyond DiscoverOrg in 2025. Modern lead scraping tools are making it easier to collect verified contacts without the complexity and cost of legacy platforms. DiscoverOrg has managed to keep its leading position in sales intelligence since its merger with ZoomInfo in 2019. However, many teams now question […]

GMass Alternatives Actually Tested by Email Marketing Experts (2025)

GMass alternatives have become critical for serious email marketers hitting Gmail’s walls in 2025. GMass handles basic automation well enough, but it’s locked into Gmail’s ecosystem with hard limits that stop most teams cold. If you’re running campaigns and need to scrape emails for cold outreach, GMass alone won’t solve your top-of-funnel challenges. If you’ve […]

GMass Review 2025: The Good, Bad & Ugly After Sending 10,000 Emails

GMass is a Chrome extension that turns your Gmail into an email marketing platform without forcing you to learn another dashboard. It’s designed for teams who want to run personalized outreach campaigns directly from their existing Gmail interface, making it appealing for users who prefer familiar workflows over feature-heavy platforms. This isn’t another surface-level review […]

Lusha vs ZoomInfo in 2025: Which Sales Tool Should You Choose?

When it comes to finding leads, IGLeads’ email verifier, Lusha, and ZoomInfo offer totally different approaches. Lusha keeps it simple: fast access to phone numbers and emails with no bells and whistles. ZoomInfo? That’s the enterprise beast loaded with intent data, org charts, technographics… and a price tag that usually makes small teams back away […]

DiscoverOrg Pricing in 2025: Avoid Surprises Before You Buy

If you’ve ever tried shopping for lead databases, you know how weirdly secretive pricing can get. DiscoverOrg, now fully rolled into ZoomInfo, is a perfect example. On the surface, it promises top-notch B2B data, intent signals, and verified contacts. But when it comes to how much it actually costs? Good luck getting a straight answer […]

Outscraper Pricing Guide 2025: Is It Really Worth The Money?

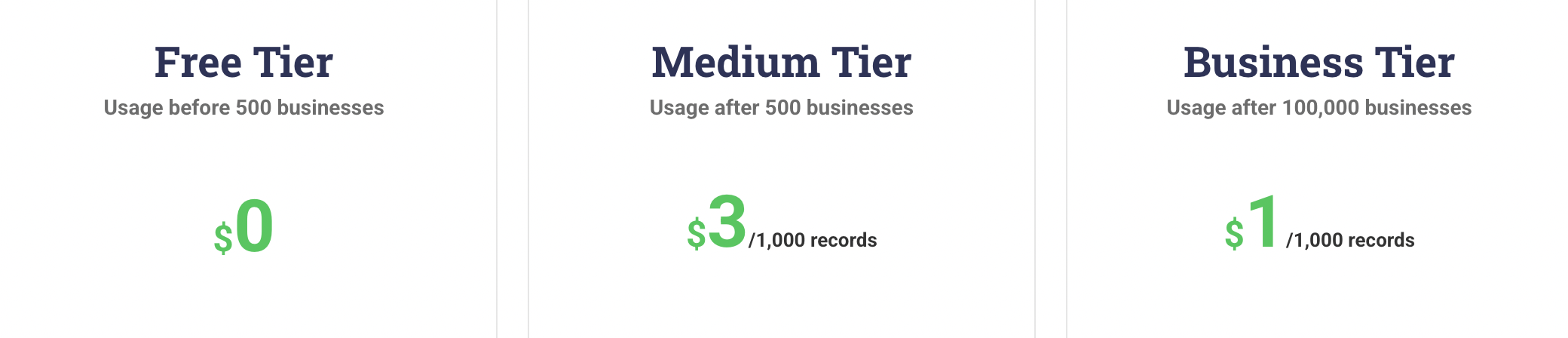

If you’ve ever tried scraping data at scale, you know how quickly pricing models turn into a maze of usage limits, credit balances, and unpredictable fees. Tools like Outscraper promise flexibility with their pay-as-you-go pricing, but once you start running jobs, the real costs aren’t always as simple as they seem. Between per-record fees, free […]

Best ParseHub Alternatives in 2025 (No-Code & Scalable Options)

Discover powerful ParseHub alternatives for efficient web scraping. Explore user-friendly tools with advanced features to streamline your data extraction process.