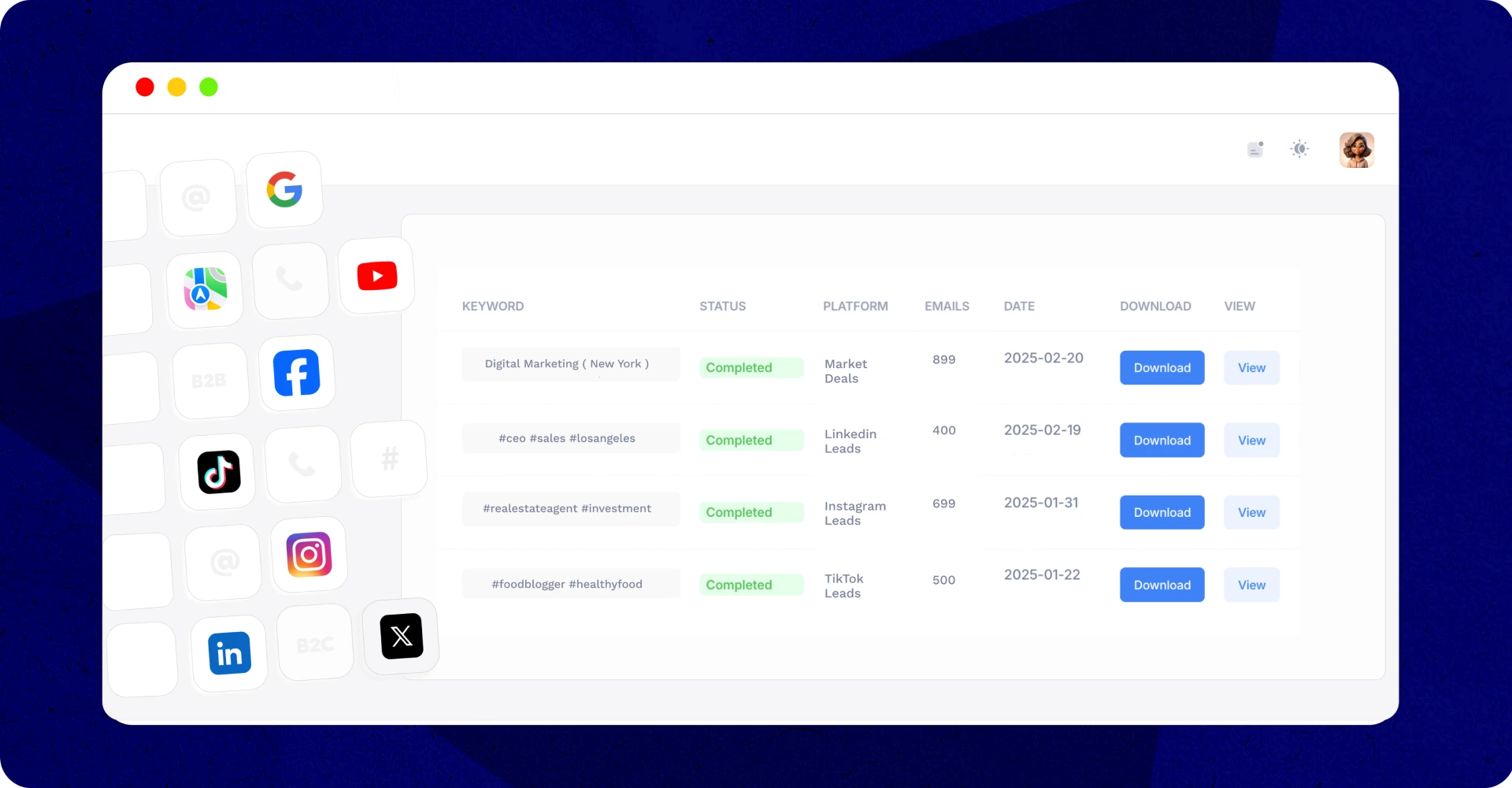

Gather Leads From Across Several Platforms

Our email scraper pulls leads from across the web. You can target a social media platform, a specific website, or Google itself.

- Looking for LinkedIn Contacts? Filter by job title, industry, and region with our LinkedIn scraper.

- Focusing on Local Businesses? Use our Google Maps scraper to pull contact details effortlessly.

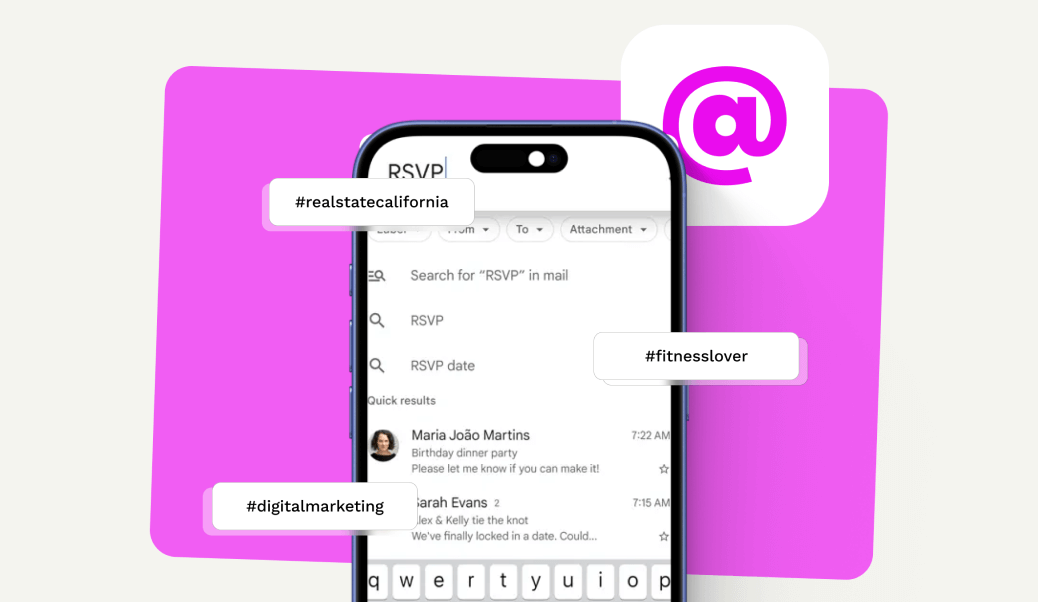

- Need Leads from Twitter (X)? We’ll extract emails using targeted hashtags.

- Interested in Facebook Groups? Extract relevant contacts from FB groups of your choice.

- B2B or B2C prospecting? Find individual consumer or company emails in your market.

Any Questions?

Our email scraper collects high-quality leads from social media platforms by using targeted keywords or hashtags. It pulls relevant contact details, including emails and phone numbers, and delivers them as a clean CSV file ready for import into your CRM.

Yes, our Email Scraper is 100% legal and compliant with DMCA, CFAA, and all relevant data protection regulations. We only collect publicly available information and don’t use unauthorized access methods like fake accounts or multiple IPs.

You can collect 1000+ email contacts in just a few hours, depending on your search parameters and target platforms. Our tool is designed for high-volume lead generation to maximize your outreach efforts.

Our Email Scraper works across multiple platforms, including LinkedIn (filtered by job title, industry, and region), Google Maps (for local businesses), Twitter/X (extracting emails using hashtags), Facebook groups, and various other social media platforms, for both B2B and B2C prospecting.

Yes, our Email Scraper allows you to target leads by specific industries and geographic regions. This targeting capability ensures you’re reaching the most relevant audience for your business offerings.

No, there are no extensions or complicated tools to install. Our email scraper is a web-based solution that works directly in your browser, making it incredibly easy to use without any technical setup.

Yes! Whether you’re looking to generate business leads (B2B) or consumer leads (B2C), our scraper allows you to target specific audiences based on your goals.

Yes! Our email scraper is fully compliant with data protection regulations, including DMCA and CFAA. We only collect publicly available data from social media platforms, ensuring full compliance with the law.

You can generate thousands of leads in just a few hours. Our email scraper is designed for quick and efficient lead collection, so you can focus on growing your business without delay.

Getting started is easy! Simply sign up for IGLeads, choose the platform you want to scrape, enter your targeted keywords or hashtags, and click “Start Scraping.” Then sit back while we scrape the platform and bring you thousands of contacts.