ParseHub Review 2025: The Truth About This Web Scraping Tool

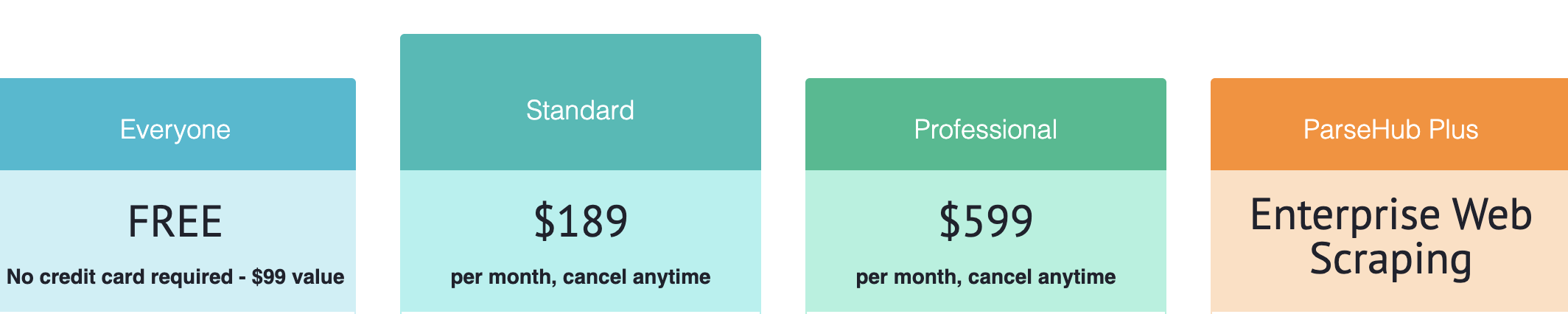

ParseHub gets marketed as a free web scraper, but that’s only half the story. While it does offer a free plan with basic functionality, most users quickly realize that serious scraping requires one of their paid plans, starting at $189 per month and going up to $599 monthly for the Professional tier, plus custom enterprise pricing.

If you’re comparing scraping tools, it’s important to understand the difference between general-purpose scrapers like ParseHub and specialized lead generation platforms like IGLeads’ email scraper. While ParseHub focuses on scraping any website, IGLeads is designed to quickly pull leads from platforms like Google Maps, Instagram, LinkedIn, and YouTube, without page limits or complex setups.

ParseHub is a desktop-based web scraping tool built to make data extraction accessible without coding. The free version (valued at $99) handles up to 200 pages in 40 minutes per run. Move to the paid tiers, and speed improves dramatically: Standard processes those same 200 pages in 10 minutes, while Professional cuts it down to under 2 minutes.

✅ Pros

- Handles complex websites with JavaScript, infinite scroll, and dynamic content

- Point-and-click interface for no-code scraping setup

- Cloud scraping available on paid plans (no need to keep your computer on)

- API access for automated workflows and integrations

- Supports multi-page navigation, dropdowns, and login-protected scraping

- Compatible with Windows, Mac, and Linux

❌ Cons

- Steep learning curve despite “no-code” claims

- Desktop app required, with local-only runs on the Free plan

- Page and project limits make scaling expensive

- Not ideal for scraping social media or search result-driven platforms

- Pricing jumps quickly: limited flexibility between tiers

- Occasional errors or failed runs on highly dynamic or protected websites

Our Rating Breakdown

- Contact Data Accuracy: ⭐⭐⭐

- Website Compatibility: ⭐⭐⭐⭐

- CRM Integration: ⭐⭐

- Email Automation: ❌ (Not Available)

- Data Enrichment: ⭐⭐

- Chrome Extension: ❌ (Desktop app only)

- Pricing Transparency: ⭐⭐

This isn’t another surface-level review full of feature screenshots and marketing fluff. You’ll get an honest look at ParseHub’s real-world performance, pricing, limitations, and whether it holds up in 2025, or if tools like IGLeads are a better fit for your scraping and lead generation needs.

What is ParseHub and How Does It Work?

ParseHub is a point-and-click web scraper that lets you grab data from websites without touching a single line of code. Instead of messing around with Python scripts or complicated APIs, you just click on the stuff you want, like product names, prices, addresses, reviews, or tables, and ParseHub turns it into clean data you can download as CSV, Excel, or JSON.

Under the hood, ParseHub is basically a powerful data extraction machine. It loads the website, grabs the page’s code (HTML, JSON, or whatever’s powering it), figures out what you’ve asked it to scrape, and organizes everything into a neat, structured format you can export, or even send straight to an app using their API.

What makes ParseHub stand out from simple browser extensions is that it handles the tough stuff. It works on complex websites with JavaScript, infinite scrolling, dropdown menus, pop-ups, pagination, and even pages that require you to log in before seeing the data.

Overview of ParseHub’s Core Functionality

Here are the standout features ParseHub offers in 2025, and how well they actually work depending on what you’re trying to scrape:

Point-and-Click Interface ⭐⭐⭐⭐

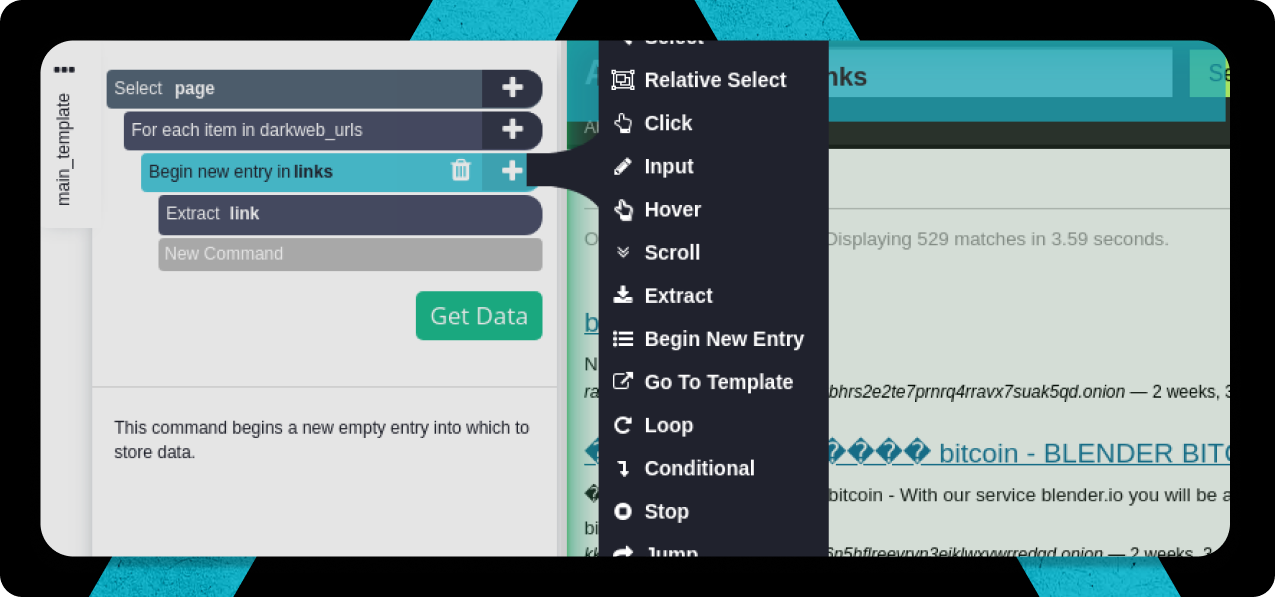

ParseHub’s biggest selling point is its visual scraper builder. When you start a new project, the interface loads the website right inside the app. You just hover over elements, like prices, names, descriptions, or images, and click to select them. ParseHub is smart enough to detect patterns across similar items on the page, automatically grouping them into a data set.

For simple websites, this process feels quick and intuitive. But as soon as the page layout gets more complicated (nested divs, inconsistent HTML structure, hidden elements), the learning curve starts to show. You may need to manually adjust XPath selectors, use the “Relative Select” tool, or troubleshoot why ParseHub isn’t capturing everything perfectly.

In short, the point-and-click interface makes scraping accessible for non-developers, but it’s not entirely foolproof for messy websites.

✅ Works great for: Lists, tables, product grids, straightforward directories.

⚠️ Requires tweaking for: Complex websites with inconsistent structures.
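To make the selector tweaking above more concrete, here is a minimal sketch of what a relative XPath selection looks like. It uses Python's standard-library `xml.etree.ElementTree` (which supports only a subset of XPath) on a made-up product grid. The markup, class names, and the `extract_products` helper are illustrative assumptions, not ParseHub's internals.

```python
import xml.etree.ElementTree as ET

# Hypothetical, well-formed markup standing in for a product grid.
SAMPLE_PAGE = """
<html><body>
  <div class="product"><h2>Desk Lamp</h2><span class="price">$24.99</span></div>
  <div class="product"><h2>Office Chair</h2><span class="price">$129.00</span></div>
  <div class="ad"><h2>Sponsored</h2></div>
</body></html>
"""


def extract_products(page: str) -> list:
    """Return one dict per product card found in the page."""
    root = ET.fromstring(page.strip())
    rows = []
    # Select every product card, skipping unrelated blocks such as ads.
    for card in root.findall(".//div[@class='product']"):
        # Relative selection: look for the name and price inside this card only.
        name = card.find("h2")
        price = card.find("span[@class='price']")
        rows.append({
            "name": name.text if name is not None else None,
            "price": price.text if price is not None else None,
        })
    return rows


if __name__ == "__main__":
    for row in extract_products(SAMPLE_PAGE):
        print(row)
```

The point of the relative lookup is that each price stays attached to its own product card. That is the same problem the "Relative Select" tool addresses when a page's structure is inconsistent.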

Dynamic Website Support ⭐⭐⭐⭐

One of ParseHub’s strongest features is its ability to handle dynamic websites, those that load content with JavaScript, AJAX, or infinite scroll. Where simpler scrapers often fail, ParseHub can mimic human interactions. It clicks buttons, opens dropdowns, scrolls pages, and waits for dynamic content to load before extracting the data.

You can program ParseHub to wait for elements to appear, handle pop-ups, submit forms, or click “Load More” buttons. This is crucial for scraping modern websites like ecommerce catalogs, job boards, or review platforms.

However, the trade-off is speed. Because it simulates browser actions, scrapes can take a lot longer than with API-based tools or scrapers focused on static content. There’s also a higher risk of scripts breaking if the website layout changes frequently.

✅ Excellent for: JavaScript-heavy sites, dynamic pages, infinite scroll.

⚠️ Slower than API scrapers; can break when websites update their frontend code.

Multi-Page Navigation ⭐⭐⭐⭐

Multi-page scraping is where ParseHub really shines, when configured correctly. You can easily set it to follow “Next” buttons, crawl through paginated listings, and gather data from every page in a sequence.

ParseHub detects pagination links or buttons during setup and allows you to add “Click” commands to follow them. You can also teach it to capture data from pages linked within listings, for example, collecting summary info from a product list and then visiting each product page for full details.

That said, if pagination involves infinite scrolling instead of buttons, setup becomes trickier. You’ll need to script scroll actions and set appropriate wait times.

✅ Reliable for: Standard paginated lists with “Next” buttons or numbered pages.

⚠️ Needs manual setup for: Infinite scroll or dynamically loaded pagination.

Login-Protected Content Extraction ⭐⭐⭐⭐

ParseHub does a solid job scraping websites that require login. You log into the site manually through ParseHub’s built-in browser, and it saves the session cookies to maintain access while scraping.

This means you can scrape data behind paywalls, dashboards, membership-only sections, or admin panels, as long as the site doesn’t have aggressive anti-bot detection.

Limitations come into play when dealing with sites that require 2FA (two-factor authentication) or frequently expire session tokens. Additionally, if the site has heavy bot detection, like LinkedIn or Instagram, ParseHub may get blocked quickly.

✅ Great for: Password-protected dashboards, directories, or internal tools.

⚠️ Risky for: Platforms with strict anti-bot policies or short session lifespans.

Data Export Options ⭐⭐⭐⭐

Once your scrape is complete, ParseHub gives you several options to export your data:

- CSV

- Excel

- JSON

- Google Sheets (via third-party integrations)

- Or stream data directly via the REST API

The exports are clean and well-structured based on the selections you configured. CSV and JSON work perfectly for spreadsheets, dashboards, or feeding into databases. If you need live syncing (like automatically updating a Google Sheet), it requires connecting via the API or an automation tool like Make or Zapier.

The only missing piece here is the lack of built-in one-click integrations for CRMs or marketing tools, something that platforms like IGLeads handle more directly.

✅ Perfect for: Clean, structured spreadsheet or JSON data.

⚠️ Manual or API-based steps needed for live integrations with CRMs or apps.

Scheduling and Automation ⭐⭐⭐

ParseHub lets you schedule scraping tasks to run on autopilot, but only on the paid Standard plan and above. You can set tasks to run daily, weekly, monthly, or at custom intervals.

Automated runs happen on ParseHub’s cloud servers, so your computer doesn’t need to stay on. This is great for use cases like price monitoring, stock tracking, or checking job listings.

However, the scheduling interface feels basic compared to dedicated scraping automation tools. There’s no error handling, alert system, or built-in retries if something breaks. If the website layout changes, your scheduled tasks will fail silently until you notice.

✅ Useful for: Routine data collection without manual effort.

⚠️ Basic scheduling: no monitoring or error handling features.
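Because a scheduled run can fail without telling you, one workaround is to validate each export yourself before trusting it. The sketch below assumes the export is a JSON object with a list of rows stored under a key named after your selection. The key name, field names, and `min_rows` threshold are hypothetical and should be adapted to your own project.

```python
import json


def check_export(raw_json: str, list_key: str, required_fields: list, min_rows: int = 1) -> list:
    """Return a list of problems; an empty list means the export looks healthy."""
    try:
        data = json.loads(raw_json)
    except json.JSONDecodeError as exc:
        return [f"export is not valid JSON: {exc}"]

    rows = data.get(list_key) if isinstance(data, dict) else None
    if not isinstance(rows, list):
        return [f"missing list '{list_key}' in export"]

    problems = []
    if len(rows) < min_rows:
        problems.append(f"expected at least {min_rows} rows, got {len(rows)}")
    for i, row in enumerate(rows):
        if not isinstance(row, dict):
            problems.append(f"row {i} is not an object")
            continue
        for field in required_fields:
            # Empty strings and missing keys both count as a broken selector.
            if not row.get(field):
                problems.append(f"row {i} is missing '{field}'")
    return problems
```

Running a check like this after every scheduled scrape, and alerting when the returned list is non-empty, catches the most common symptom of a layout change: rows that come back empty or incomplete.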

API Access ⭐⭐⭐⭐

For advanced users, ParseHub offers a REST API that lets you trigger scraping tasks, check status, and download results programmatically. This is crucial for anyone wanting to plug scraped data into automated workflows, like pushing contacts to a CRM, syncing price data to a dashboard, or triggering updates in real time.

The API is fairly well-documented and straightforward. You can authenticate, start runs, check progress, and fetch the output files in JSON or CSV. However, API usage is locked behind the paid plans, and it lacks native SDKs or advanced queue management that enterprise-grade scrapers might offer.

✅ Strong for: Developers or businesses wanting scraping integrated into apps or workflows.

⚠️ Requires technical know-how to set up; no SDKs, only REST API calls.
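As a rough illustration of the start, check, and fetch flow described above, the sketch below builds the three HTTP requests with Python's standard library and nothing else. The base URL and endpoint paths are written as I understand ParseHub's public v2 API and should be treated as assumptions to confirm against the official documentation. The tokens and key are placeholders.

```python
from urllib.parse import urlencode
from urllib.request import Request

# Assumed base URL and paths; confirm against ParseHub's API documentation.
API_BASE = "https://www.parsehub.com/api/v2"


def start_run_request(project_token: str, api_key: str) -> Request:
    """Build a POST request that starts a run of one project."""
    body = urlencode({"api_key": api_key}).encode("utf-8")
    return Request(f"{API_BASE}/projects/{project_token}/run", data=body, method="POST")


def run_status_request(run_token: str, api_key: str) -> Request:
    """Build a GET request that fetches the status of a run."""
    query = urlencode({"api_key": api_key})
    return Request(f"{API_BASE}/runs/{run_token}?{query}", method="GET")


def run_data_request(run_token: str, api_key: str, fmt: str = "json") -> Request:
    """Build a GET request that downloads a finished run's data."""
    if fmt not in ("json", "csv"):
        raise ValueError("fmt must be 'json' or 'csv'")
    query = urlencode({"api_key": api_key, "format": fmt})
    return Request(f"{API_BASE}/runs/{run_token}/data?{query}", method="GET")
```

Each request can be sent with `urllib.request.urlopen(req)`. Keeping request construction separate from sending makes the flow easy to test without touching the network or spending pages from your plan.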

Supported Platforms and System Requirements

ParseHub runs as a desktop application for Windows, Mac, and Linux. This differentiates it from purely cloud-based scrapers but also introduces some limitations. The desktop app must be running for scrapes unless you’re on a paid plan with cloud scraping enabled.

Minimum Requirements:

- Windows: Windows 7, 8, or 10 (64-bit); Pentium 4 processor with SSE2 support; 512MB RAM; 200MB free disk space

- Mac: macOS 10.15+; Intel x86 processor; 512MB RAM; 200MB free disk space

- Linux: Requires GTK+ 3.4+, GLib 2.22+, Pango 1.14+, and X.Org 1.0+

If you’re on the Free plan, scraping happens locally on your computer. On the paid plans (Standard and above), runs move to ParseHub’s cloud servers, meaning your computer doesn’t need to stay on.

Is ParseHub Safe to Use?

Yes. ParseHub is considered safe from both a technical and privacy perspective. All data transfers between the ParseHub app (or cloud servers) and your device are encrypted via HTTPS, ensuring security during data transit.

In addition, ParseHub offers:

- IP Rotation: Helps prevent scraping bans by rotating IP addresses when making multiple requests.

- Throttle Control: Users can slow down scraping speed to avoid detection.

- Session Login Security: You manually log into websites within ParseHub’s browser, but credentials are not stored beyond the session unless you choose to save them locally.

Important: “Safe” refers to data privacy and app security. It doesn’t mean all scraping targets are legally safe. Users are responsible for complying with each website’s terms of service, scraping policies, and local laws.

How to Sign Up and Get Started with ParseHub

Starting with ParseHub is straightforward but comes with the first surprise. It’s a downloadable desktop app rather than a web-based platform.

Steps to Sign Up:

- Visit ParseHub’s website and download the desktop app for your OS.

- Install the app and open it.

- On the login screen, click “Sign up” to create a free account.

- After account creation, click “New Project”, enter the URL of the site you want to scrape, and the built-in browser will load it.

- Begin selecting elements to extract using the visual interface.

Once configured, you can either run the scrape immediately from your desktop or, on paid plans, deploy it to ParseHub’s cloud servers.

CRM Integration and Automation

ParseHub doesn’t offer native CRM integrations like some lead generation platforms. However, it supports automation through third-party workflow tools.

Integration Options:

- Zapier: While ParseHub doesn’t have a direct Zapier app, you can connect ParseHub’s API to Zapier via webhooks. This enables automatic data transfers into CRMs like HubSpot, Salesforce, Pipedrive, and Zoho.

- n8n.io: An open-source automation tool that supports API-based connections with ParseHub and hundreds of apps. This is more developer-friendly but flexible and free.

- Make (formerly Integromat): Supports webhook-based automations for pushing ParseHub data into Google Sheets, Slack, Airtable, and CRMs.

Limitations:

- There’s no built-in CRM sync button. You must use API keys, webhooks, or exports.

- Compared to tools like IGLeads’ email scraper, which natively pull social or local business data directly into lead formats, ParseHub’s CRM workflow is manual or API-driven.
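To give a sense of what "manual or API-driven" means in practice, here is a minimal sketch of the glue code a webhook-based workflow needs: rename scraped columns to CRM fields, drop rows without an email, and build a JSON POST. The field mapping, payload shape, and webhook URL are all hypothetical; your automation tool defines what it actually expects.

```python
import json
from urllib.request import Request

# Hypothetical mapping from scraped column names to CRM field names.
FIELD_MAP = {"name": "company", "email": "email", "phone": "phone"}


def to_lead(row: dict) -> dict:
    """Rename scraped columns to CRM fields, dropping empty values."""
    lead = {}
    for source_field, crm_field in FIELD_MAP.items():
        value = (row.get(source_field) or "").strip()
        if value:
            lead[crm_field] = value
    return lead


def webhook_request(webhook_url: str, rows: list) -> Request:
    """Build a JSON POST carrying only the rows that have an email address."""
    leads = [lead for lead in map(to_lead, rows) if lead.get("email")]
    body = json.dumps({"leads": leads}).encode("utf-8")
    return Request(
        webhook_url,
        data=body,
        method="POST",
        headers={"Content-Type": "application/json"},
    )
```

Sending the request is a single `urllib.request.urlopen(req)` call. The mapping and filtering logic is the part you end up owning and maintaining.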

Bottom line: ParseHub offers robust scraping power, particularly for dynamic websites that cheaper tools can’t handle. Its point-and-click system lowers the barrier to entry, but a learning curve remains, especially for multi-step workflows. The fact that it runs as a desktop app (with cloud runs reserved for paid plans) is both a strength for flexibility and a limitation for scalability.

For structured website data (ecommerce listings, job boards, directories), ParseHub shines. But for prospecting on platforms like Instagram, LinkedIn, or Google Maps, tools like IGLeads are more efficient, faster to set up, and require zero technical maintenance.

ParseHub pricing: is it worth the cost?

ParseHub’s pricing is built around page limits, processing speed, and cloud vs. desktop execution. While it’s advertised as having a free plan, serious scraping quickly pushes most users into one of their paid plans. Unlike tools that use credit-based systems or flat-rate models, ParseHub’s pricing scales based on the number of pages you scrape, how quickly you need it done, and whether you want to run tasks in the cloud or locally on your computer.

Free, Standard, Professional, and ParseHub Plus Plans Explained

Free Plan: Good for Testing, Not Production

The free plan is technically generous but designed more for trying the tool than doing real scraping work. You get:

- 200 pages per run

- 5 public projects (data is visible to others)

- 40 minutes max processing time per run

- Local desktop scraping only (no cloud)

- No scheduling

It’s useful for one-off tasks or testing how ParseHub works, but the limitations become obvious fast. If your scrape involves multiple pages, slow page loading, or dynamic content, the 40-minute cap becomes a bottleneck.

Standard Plan: Starts at $189/month ($155/month quarterly)

This is the first real “business-ready” tier. The Standard plan upgrades your scraping from desktop to cloud, with faster processing and more capacity:

- 10,000 pages per run

- 20 private projects

- Cloud scraping (no need to keep your computer on)

- 10-minute processing time for 200 pages (vs. 40 mins on Free)

- API access for automation

- IP rotation included

- Schedule scraping tasks (daily, weekly, or custom)

- Dropbox and Amazon S3 integrations for data delivery

For small teams, this is often the sweet spot until you start running larger scraping jobs. The page limit might sound like a lot, but high-volume users can still hit it faster than expected.

Professional Plan: $599/month ($505/month quarterly)

The Professional plan unlocks much bigger capacities and significantly faster speeds. It’s designed for teams scraping thousands of pages regularly. You get:

- 50,000 pages per run

- 120 private projects

- Cloud scraping with priority processing

- Under 2-minute processing time for 200 pages

- 30-day data retention (vs. 14 on Standard)

- API access with higher rate limits

- Priority customer support

- Unlimited scheduling

- IP rotation, Dropbox, and Amazon S3 support

It’s a huge jump in price compared to Standard, but for users scraping at scale, the extra speed and page limits can be the difference between success and constant troubleshooting.

Enterprise (ParseHub Plus): Custom Pricing

For companies that don’t want to manage scrapers themselves, ParseHub offers a done-for-you enterprise solution. Features include:

- Fully managed scraping services

- Dedicated account manager

- Custom feature development if needed

- SLAs, priority uptime, and premium support

- Unlimited page limits and resources based on contract

This plan is for organizations that need guaranteed data pipelines without worrying about configuring projects, managing errors, or scaling scrapers themselves.

The Catch: Page Limits Add Up Fast

ParseHub’s pricing sounds simple at first: the page limits apply per run, not per month. But here’s the catch: if your scraping job involves hundreds of product listings, directories, or multi-page navigation, the page counts rack up quickly.

For example, if you’re scraping 1,000 business listings with 5 detail pages each, that’s 6,000 pages in a single run, already more than half of the Standard plan’s 10,000-page cap. Double the number of listings and the same job needs the Professional plan.
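The arithmetic in that example can be sketched in a few lines. The per-run caps are the ones quoted in this review; the counting rule (one page per listing plus its detail pages) is an assumption that matches the 6,000-page figure above, and the helper names are made up for illustration.

```python
from typing import Optional

# Per-run page caps as quoted in this review, smallest plan first.
PLAN_PAGE_CAPS = {"Free": 200, "Standard": 10_000, "Professional": 50_000}


def pages_per_run(listings: int, detail_pages_each: int) -> int:
    """Count one page per listing plus that listing's detail pages."""
    return listings * (1 + detail_pages_each)


def smallest_plan(pages: int) -> Optional[str]:
    """Return the first plan whose per-run cap fits, or None if none does."""
    for plan, cap in PLAN_PAGE_CAPS.items():
        if pages <= cap:
            return plan
    return None


if __name__ == "__main__":
    pages = pages_per_run(listings=1_000, detail_pages_each=5)
    print(pages, smallest_plan(pages))
```

Running the numbers before you build a project is the cheapest way to find out which tier a job actually requires.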

Bottom line: ParseHub works well for structured web data, but the pricing model favors users who run large scrapes occasionally, not those who need high-frequency or continuous scraping like lead generation from social platforms.

IGLeads Pricing Overview

If you’ve ever searched something like “ParseHub review Reddit,” you’ll notice the same thing keeps coming up: the price. ParseHub’s page-based model looks simple on the surface, but it can get expensive fast if you’re running big scraping jobs or don’t set things up perfectly.

That’s where IGLeads feels like a breath of fresh air. It runs on flat, predictable pricing: no page limits, no waiting around for tasks to finish, and no surprise costs because you accidentally blew through your page allowance. For anyone tired of juggling page counts or worrying about how long a scrape is going to take, IGLeads is just… easier.

Bottom line? ParseHub’s pricing can make sense if you’ve got very specific scraping needs, you’re good at optimizing your projects, and you don’t mind a little technical tinkering. But if all you really want is to pull data fast, without dealing with page limits, cloud processing rules, or speed throttles, IGLeads is a simpler (and often cheaper) way to get it done.

What users say about ParseHub: reviews and feedback

Real user feedback often tells a different story than what ParseHub’s marketing claims. After digging through dozens of reviews across G2, Capterra, Reddit, and Trustpilot, one thing becomes obvious: how much you like ParseHub depends heavily on your technical skills and how complex your scraping projects are.

ParseHub Reviews Summary

| Pros | Cons |

|---|---|

| ✅ Handles JavaScript-heavy websites, dynamic content, and pagination | ⚠️ Steep learning curve for users expecting true point-and-click simplicity |

| ✅ Cloud-based scraping available on paid plans | ⚠️ Pricing jumps fast with page limits and larger projects |

| ✅ Supports login-protected scraping for dashboards or member areas | ⚠️ Desktop app required for runs unless you’re on a paid cloud plan |

| ✅ Flexible data exports: CSV, Excel, JSON, or API | ⚠️ No native CRM or Google Sheets integrations: API setup required |

| ✅ Can handle multi-step navigation like clicking buttons, forms, or dropdowns | ⚠️ Occasional errors when websites update their frontend or use aggressive anti-bot systems |

G2 and Capterra vs. Trustpilot: Different Expectations, Different Results

ParseHub scores 4.4–4.6 stars on G2 and Capterra, with most reviews coming from developers, data analysts, and technically inclined users.

But on Trustpilot, things look a bit different: the rating drops to just 2.9 stars.

“ParseHub makes the dirty work, all the things that you used to do manually to collect the information on a website, now is automatic with this solution, the best for bulk information collection. I think ParseHub needs to be a little bit more intuitive, a little bit more user friendly, some times the steps are redundant and you need to do everything all over again.” – Ricardo AA, Graphic Designer.

This isn’t random. Reviews on G2 and Capterra come from people expecting a technical tool. Trustpilot tends to attract more general users who expect true plug-and-play simplicity, and ParseHub isn’t that.

Reddit Reality Check

Jump into Reddit’s web scraping communities and you’ll find brutally honest takes. Common themes?

Frustration with page limits when scraping large websites, and occasional headaches with ParseHub’s point-and-click builder breaking if the website structure changes. Users frequently discuss whether it’s worth upgrading to the Professional plan just to avoid cloud restrictions and longer wait times.

“ParseHub is great when it works… until it doesn’t. One site update and your whole scraper crashes.”

The consensus? ParseHub is powerful, but it rewards users who are willing to troubleshoot and fine-tune their scrapers. If you’re expecting 100% no-code, hands-off simplicity, expect a reality check.

Review Platform Breakdown

| Platform | Rating | Reviews |

|---|---|---|

| G2 | 4.4/5 stars | 60+ reviews |

| Capterra | 4.6/5 stars | 50+ reviews |

| Trustpilot | 2.9/5 stars | 40+ reviews |

What Users Actually Love (and Hate)

What users love:

- Scrapes complex websites reliably — including JavaScript, infinite scroll, and login-protected pages

- Powerful when set up correctly — handles big scraping projects with lots of navigation

- Flexible data output — CSV, Excel, JSON, API integration

- Great for developers and data teams who don’t mind tweaking selectors or troubleshooting

“The tool helps in collecting the data from any page or pages (up to 200 pages for the free version) and gets you the defined data in .CSV or JSON, which can be used for creating any database or dashboards. This has helped me save so much manual work and time, as ParseHub did it in minutes.” – Daniel O., Marketing Manager

Common complaints:

- Not truly no-code — “You’ll probably need to learn XPath or use the advanced selector features”

- Page limits and processing caps — “Scaling gets expensive fast”

- Desktop dependency on lower plans — “Have to leave my laptop running for hours unless I pay for cloud”

- Vulnerable to website updates — “Scrapers break when the page structure changes”

“I was not able to publish the complete scraped output. The output is very limited.” – Preet N., Program Manager.

Bottom line: Most negative reviews boil down to the same thing: expecting plug-and-play scraping and getting a tool that still needs technical finesse. ParseHub is absolutely capable, but it’s better suited for users willing to fine-tune workflows rather than beginners looking for instant, hands-off results.

What Works (And What Doesn’t) About ParseHub

After testing ParseHub across real-world scraping projects, here’s an honest look at where the platform delivers, and where it can frustrate users.

“ParseHub is a solid tool for scraping complex websites that other scrapers can’t handle. But if you think it’s fully no-code, you’re in for a surprise. Be ready to troubleshoot selectors and workflows when things break.” – Julia P., Marketing Analyst

Scalability & Complex Website Scraping ⭐⭐⭐

ParseHub’s biggest strength is handling complex websites, pages with JavaScript, infinite scroll, dropdown menus, pop-ups, or login walls. It’s one of the few scrapers in this price range that can reliably handle dynamically loaded websites without writing any code (at least most of the time).

That said, scalability has limits. ParseHub’s pricing model is based on page limits per run, not per month. Scraping a large directory with thousands of listings and nested pages can chew through your limits fast. If you’re running frequent or very large scrapes, expect to upgrade to the Professional plan quickly, and even then, you may hit ceilings.

Unlike true enterprise-grade scraping tools, ParseHub isn’t built for massive-scale web scraping at unlimited speeds. It’s great for complex, structured projects but not ideal for high-frequency scraping at scale.

Point-and-Click Interface & Automation ⭐⭐⭐⭐

What I like: The point-and-click interface is ParseHub’s core appeal. It feels intuitive: select an element, build a selection pattern, click next. It handles websites that would break simpler scrapers like Octoparse or Instant Data Scraper.

Even better, it supports tasks like pagination, clicking through links, handling buttons, and even scraping behind login forms. You can automate recurring tasks with the paid plans using cloud-based scheduling.

But here’s the reality check: While ParseHub sells itself as no-code, the more complex your scrape, the more you’ll end up using XPath selectors, RegEx filters, or troubleshooting why ParseHub didn’t grab what you expected. It’s far from true plug-and-play simplicity.

✅ Pros:

- Cloud scraping runs even when your computer is off (Standard plan and above)

- Handles JavaScript-heavy websites, infinite scroll, dropdowns, forms, and pop-ups

- Can scrape login-protected websites with session cookies

- Supports multi-page navigation and nested page scraping (parent/child pages)

- Clean data exports to CSV, Excel, JSON, or via API

❌ Cons:

- Page limits per run — high-volume scraping gets expensive quickly

- Desktop app required to build projects; Free plan runs locally only (cloud runs need a paid plan)

- Not truly no-code — XPath, RegEx, and selector tweaking is often necessary

- Vulnerable to site structure changes — a small HTML update can break your scraper

- No native CRM or Google Sheets integrations; API setup is required for automation

“The support the only thing tried to do was selling, without even allowing me to actually experience the software, as it would output an empty file due to me just evaluating and not paying. What would you want me to evaluate if I cannot actually use it!” – Mark V., Managing Director

Who Should Use ParseHub

Perfect for: Marketers, data analysts, researchers, and small businesses who need reliable scraping of complex websites, including login-protected pages, JavaScript-heavy content, and multi-page navigation. If you don’t mind tweaking selectors or learning how XPath works, ParseHub delivers a lot of power without needing to write code from scratch.

Not ideal for: Beginners looking for true plug-and-play scraping. If you don’t want to deal with page limits, desktop scraping setups, or figuring out why your scraper broke after a website update, a tool like IGLeads is a much simpler flat-rate alternative for scraping leads from platforms like Google Maps, Instagram, and LinkedIn with zero selector setup.

Bottom line: ParseHub is excellent for users who want flexible, complex web scraping without writing full code. But it’s not “set it and forget it”. It rewards users who are willing to troubleshoot and fine-tune workflows. If you’re expecting a fully no-code, hands-off tool, ParseHub will likely frustrate you. If you’re willing to get under the hood a bit, it’s one of the most capable scrapers in its price range.

ParseHub vs IGLeads: which tool fits your workflow?

Reading ParseHub reviews only gives you part of the picture. When you’re choosing between web scraping tools, you need to understand how each platform actually fits your specific data needs. ParseHub takes a broad approach to web scraping, while IGLeads carved out a specialized niche.

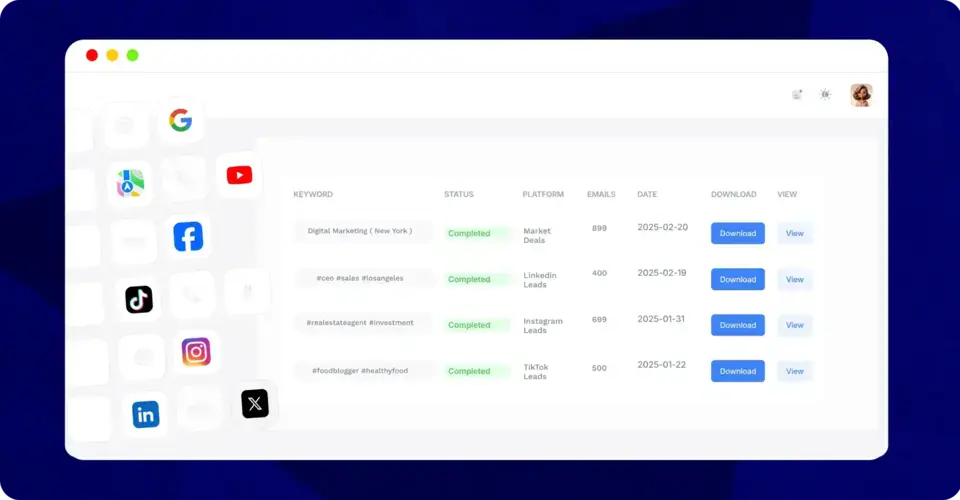

What IGLeads does differently

IGLeads specializes in social media data extraction, particularly from platforms like Instagram, LinkedIn, and Google Maps. Where ParseHub serves as a general-purpose scraper for any website, IGLeads focuses on pulling targeted leads from social networks and local business directories.

This specialization makes IGLeads particularly effective for:

- Marketing agencies running campaigns for local businesses

- Real estate agents scraping property listings and homeowner data

- Sales teams building prospect lists from Instagram or LinkedIn

- Growth marketers targeting influencers and creators

IGLeads cuts through the complexity by focusing on what many teams actually need: fresh, targeted contact data from platforms that traditional scrapers can’t easily access.

Feature comparison: ParseHub vs IGLeads

| Feature | ParseHub | IGLeads |

|---|---|---|

| Learning curve | ⚠️ Steep (1-3 hours per project) | ✅ Simple (minutes to set up) |

| Data sources | ✅ Any website | ✅ Instagram, LinkedIn, Google Maps, YouTube |

| Pricing simplicity | ❌ Complex tiers ($189-$599/month) | ✅ Flat-rate plans (starting at $49/month with annual billing) |

| Technical setup | ⚠️ Desktop app required | ✅ Web-based dashboard |

| Scheduling | ✅ Advanced automation | ✅ Basic scheduling available |

| Enterprise features | ✅ Custom solutions available | ❌ Focused on SMB needs |

When to choose ParseHub vs IGLeads

Choose ParseHub if you need:

- Complex scraping across diverse website types

- Advanced automation and scheduling features

- Enterprise-level data extraction projects

- Integration with existing technical workflows

Choose IGLeads if you want:

- Fast, targeted lead generation from social platforms

- Simple setup without technical complexity

- Transparent pricing without contract negotiations

- Fresh contact data from platforms ParseHub can’t easily access

How teams actually use both tools

Here’s the reality: many data-driven teams don’t see this as an either-or decision. ParseHub handles broad web scraping needs, while IGLeads fills the gap for social media and local business data.

For example, a marketing agency might use ParseHub to scrape competitor pricing from e-commerce sites, then switch to IGLeads to build targeted Instagram influencer lists for client campaigns. The tools complement each other rather than compete.

Bottom line: ParseHub is powerful for general web scraping, but requires technical patience. IGLeads delivers faster results for social media and local lead generation, but won’t replace a full-featured scraping platform.

If you’re testing fast, targeting social platforms, or need simple lead generation without the overhead, IGLeads gets you there with less friction.

Still unsure? Try IGLeads for free and see how it compares to ParseHub’s complexity for your specific use case.

Final Verdict: Is ParseHub Worth It?

Bottom line? ParseHub is a powerful tool if you need to scrape complex websites, especially those with JavaScript, infinite scroll, or login walls. It’s one of the most capable visual scrapers out there for non-coders who don’t mind tweaking selectors and getting into the details of how websites are structured.

But it’s not for everyone. If you’re looking for true plug-and-play simplicity, or if you need to scrape social platforms like Instagram, LinkedIn, or Google Maps without dealing with page limits, desktop apps, or technical troubleshooting, ParseHub will probably feel frustrating fast.

For those cases, tools like IGLeads offer a far simpler approach. It’s flat-rate, fully cloud-based, and purpose-built for lead scraping from social media and local business directories, without the complexity.

Final recommendation: ParseHub is great for developers, data analysts, and marketers dealing with structured websites that most scrapers can’t handle. But if your focus is lead generation from platforms like LinkedIn, Instagram, or Google Maps, skip the headaches and try IGLeads instead.

Related to ParseHub

- Best ParseHub Alternatives in 2025 (No-Code & Scalable Options)

- ParseHub Pricing Guide 2025: Is The Free Plan Worth It?

Frequently Asked Questions

Is ParseHub free?

ParseHub does have a free plan, but it’s mostly for testing. You’re limited to 200 pages per run, slower processing, and public projects only. For anything serious, you’ll need to upgrade to a paid plan.

Can ParseHub scrape dynamic websites?

Yes, that’s actually one of ParseHub’s biggest strengths. It can handle JavaScript-heavy websites, infinite scrolling, pop-ups, dropdowns, and multi-step navigation. But you’ll still need to set up the scraper carefully to handle those elements properly.

Is ParseHub really no-code?

Sort of. The point-and-click interface makes basic scraping pretty approachable. But as soon as websites get complex, you’ll likely deal with XPath selectors, RegEx, or troubleshooting why certain elements didn’t scrape. It’s not 100% no-code if that’s what you’re expecting.

Does ParseHub have an API?

Yes. ParseHub’s API lets you start scraping tasks, monitor progress, and pull data directly into apps, databases, or CRMs. However, API access is only available on paid plans, and it requires a bit of technical setup.

Should I use ParseHub or IGLeads?

If you’re scraping websites like ecommerce stores, directories, or data-rich pages, ParseHub is solid. But if your goal is lead scraping from platforms like Instagram, LinkedIn, or Google Maps, without worrying about page limits or selectors, IGLeads is a faster, simpler alternative with flat-rate pricing.