Unlock Leads from 11+ Platforms with Ease

Our all-in-one scraper streamlines lead generation by pulling valuable contacts from multiple sources effortlessly.

- Instagram – Extract emails and user data through targeted hashtags for pinpoint prospecting.

- LinkedIn – Discover leads by job title, industry, and location to connect with key professionals.

- Google Maps – Use our Google Maps scraper to gather contact details for precision outreach.

- B2B Prospecting – Access company emails to connect directly with decision-makers.

- Twitter – Identify potential leads from industry conversations and trending discussions.

Any Questions?

Find the contacts you need instantly—fast, simple, and effortless.

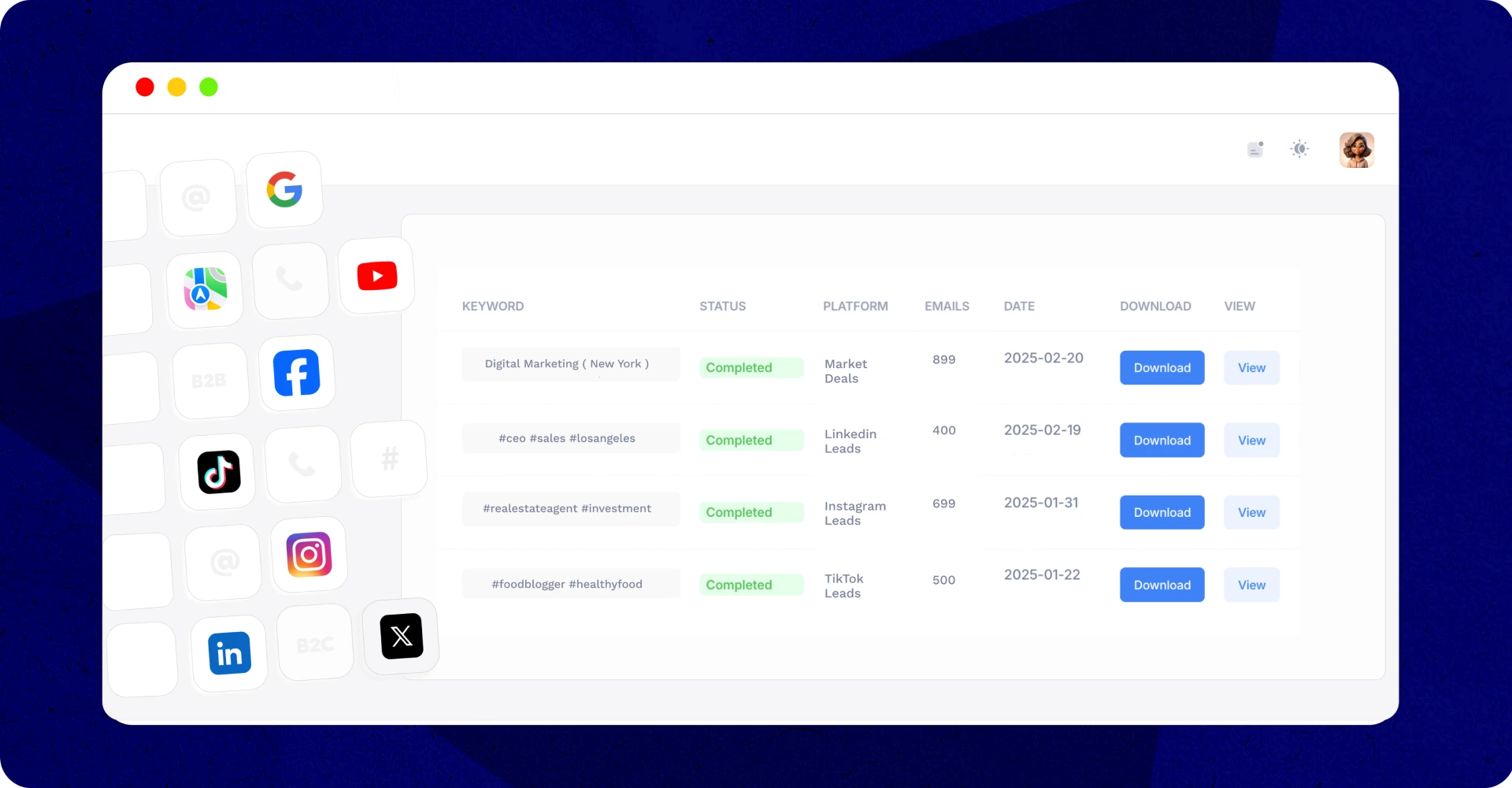

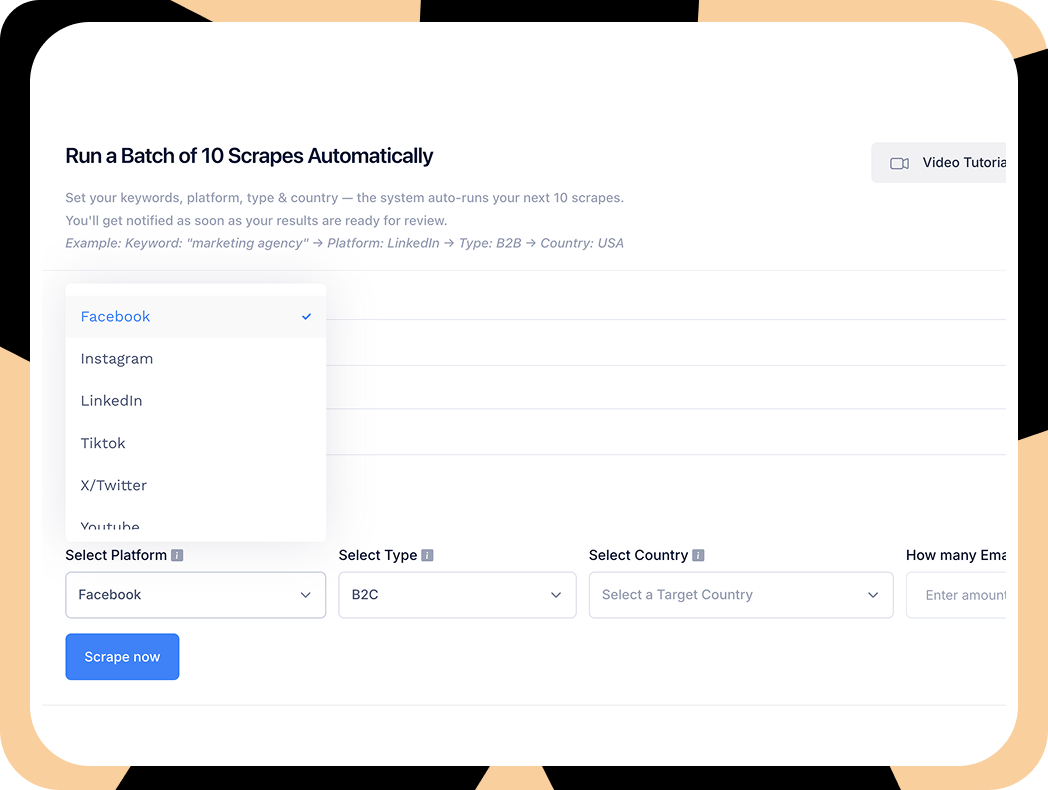

IGLeads is a powerful email lead scraper that helps businesses collect targeted contact details from multiple platforms. You enter specific keywords, locations, or industries, and the tool scans for and extracts emails and phone numbers so you can connect with potential clients quickly and efficiently.

Yes! IGLeads is designed to comply with applicable laws, including the DMCA and the CFAA. We only extract publicly available information, ensuring ethical and legal lead generation practices. Our tool does not rely on unauthorized access or fake accounts, and it does not violate any platform’s terms of service.

You can extract leads from 11+ platforms, including LinkedIn, Google Maps, Twitter, Instagram, and B2B directories. Our tool ensures high-quality contact collection across industries, helping you target the right audience with minimal effort.

The number of leads depends on the platform, keywords, and filters you use. However, our advanced system is designed to pull thousands of targeted leads in just a few hours, making prospecting more efficient and scalable.

Not at all! IGLeads is designed for ease of use. With a simple sign-up, intuitive search filters, and one-click data export, anyone can collect high-quality leads without any technical expertise or complicated setups.

Yes! You can download your leads in a structured CSV file, ready for seamless integration into your CRM, email marketing software, or sales pipeline. No messy formatting—just clean, organized data at your fingertips.
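For teams that want to tidy an export before importing it into a CRM, here is a minimal sketch in Python. It assumes the CSV has `name`, `email`, and `phone` columns; those column names and the sample rows are hypothetical, so adjust them to match the header row in your actual file.

```python
import csv
import io


def load_leads(csv_text):
    """Parse exported CSV text, skipping rows with missing or duplicate emails."""
    seen = set()
    leads = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        # "email" is an assumed column name, not a documented export field.
        email = (row.get("email") or "").strip().lower()
        if not email or email in seen:
            continue
        seen.add(email)
        row["email"] = email
        leads.append(row)
    return leads


# Hypothetical sample data standing in for a downloaded export.
sample = """name,email,phone
Ada Example,ada@example.com,555-0100
Ada Example,ADA@example.com,555-0100
No Email,,555-0101
"""

leads = load_leads(sample)
print(len(leads), "unique leads with an email address")
```

Normalizing emails to lowercase before comparing them keeps the same contact from being imported twice under different capitalization.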

Our tool uses intelligent filters and real-time data extraction to pull fresh, high-intent leads. Unlike outdated lead lists, we provide accurate contact details to ensure better response rates and higher conversion potential.

We offer flexible pricing plans based on your needs, whether you’re a small business or an enterprise. Our cost-effective model helps you save on expensive ads and unreliable lead lists while delivering high-quality prospects at scale.

Absolutely! Whether you’re in real estate, e-commerce, marketing, SaaS, or any other industry, our tool can help you find leads tailored to your specific niche. Just enter your criteria, and we’ll do the heavy lifting.

You can start immediately after signing up! Our tool works in real time, allowing you to extract and download targeted leads in minutes. No waiting and no complicated processes, just instant, high-quality prospecting.