Free vs Paid Google Maps Scrapers: Which One Should You Use?

Google Maps is one of the largest sources of business data available, and you’re probably not using it effectively. With over 200 million businesses and places worldwide, it’s among the richest sources of localized information for lead generation, market research, and competitive intelligence.

The numbers tell the story. New York alone has 8,000 restaurants listed on Google Maps. If you tried accessing this data manually, you’d be there for months. Google’s official API isn’t much help either. Sure, they offer $200 in free credits, but that barely covers 28,000 dynamic map loads – hardly enough if you’re serious about data collection at scale.

This is where the free vs paid scraper decision gets interesting.

Free scrapers look appealing on paper. No upfront costs, no contracts, just download and start extracting. But dig deeper and you’ll hit walls fast: slow extraction speeds, incomplete data, and reliability issues that can kill your workflow when you need it most.

Premium scrapers like IGLeads.io have already processed tens of millions of live Google Maps listings worldwide, ensuring every lead you export is accurate, current, and ready for outreach. You won’t waste hours on broken emails or missing phone numbers—the data is clean, compliant, and export-ready.

Here’s what most people don’t realize: scraping tools crush official APIs on cost-effectiveness for high-volume tasks. The flexibility is better too. But the choice between free open-source tools and premium services comes with real trade-offs in reliability, speed, and legal considerations you need to understand.

We’ll break down exactly what you get – and what you give up – with both free and paid Google Maps scrapers in 2025.

Key Evaluation Criteria

When you’re weighing free vs paid Google Maps scrapers, certain factors separate the basic tools from solutions that actually work at scale. Here’s what matters for your data extraction needs.

Data completeness

The gap between basic and complete data extraction is huge. Most scrapers grab standard business info – name, address, phone number. But premium services pull additional data points like histograms for popular times, something you can’t even get from Google’s official Places API. Free options typically give you bare-bones information, while paid tools extract up to 35+ data attributes per listing, including SEO elements and social media links.

If you’re building lead lists, those extra data points make the difference between a contact and a qualified prospect.

Speed & throughput

Speed differences are dramatic. Free scrapers crawl along at 20-30 records per minute. Premium services like Octoparse handle up to 200 businesses per minute at basic speed. The most efficient paid solutions extract data at rates of 120-250+ records per minute, with some offering scalable concurrency for up to 40x faster performance.

When you’re pulling data for entire cities or regions, those speed differences translate to hours vs days.
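To put those rates in perspective, here is a rough back-of-the-envelope calculation. The 100,000-record job size is an assumption chosen purely for illustration; the two rates are taken from the ranges quoted above.

```python
def hours_to_scrape(records: int, records_per_minute: float) -> float:
    """Return the wall-clock hours needed at a steady extraction rate."""
    if records_per_minute <= 0:
        raise ValueError("records_per_minute must be positive")
    return records / records_per_minute / 60


# Hypothetical job size, for illustration only.
JOB_SIZE = 100_000

for label, rate in [("free tool (25/min)", 25), ("paid tool (200/min)", 200)]:
    print(f"{label}: {hours_to_scrape(JOB_SIZE, rate):.1f} hours")
```

At 25 records per minute the job takes roughly 67 hours of continuous running; at 200 per minute it takes a little over 8 hours. Real runs will vary with retries, blocking, and server load.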

Limits, quotas & anti-blocking

Free solutions hit Google’s 120-result limit per search fast. Premium options bypass this through smarter techniques:

- Intelligent query splitting and proxy rotation

- Built-in CAPTCHA solving capabilities

- Automated retries and browser fingerprinting

Google’s official API enforces strict quotas – only 50 single-resource read requests per second. Paid scrapers typically offer unlimited requests with intelligent rate limiting to avoid detection.

Ease of use / learning curve

Technical requirements vary wildly. Open-source scrapers demand coding skills and ongoing maintenance. Premium no-code solutions like Octoparse offer point-and-click interfaces with pre-built templates, making them accessible even if you’ve never written a line of code.

For most business users, the learning curve difference is make-or-break.

Pricing & cost per 1,000 records

Paid scrapers typically use three pricing models:

- Pay-per-record: $1-$4 per 1,000 records

- Subscription-based: $40-$120 monthly

- Execution-time pricing: Charging by processing duration rather than results

Many providers offer free tiers ranging from 100-500 records for testing before you commit.
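A quick way to compare the first two models is to find the volume at which a flat subscription becomes cheaper than paying per record. The sketch below uses figures from the ranges above ($4 per 1,000 records against a $120 subscription) and assumes the subscription actually covers the volume you need, which is not true of every plan.

```python
def pay_per_record_cost(records: int, price_per_1k: float) -> float:
    """Total cost when billed per 1,000 extracted records."""
    return records / 1000 * price_per_1k


def break_even_records(subscription: float, price_per_1k: float) -> float:
    """Monthly volume above which a flat subscription is the cheaper option."""
    return subscription / price_per_1k * 1000


# Illustrative values taken from the ranges quoted in this section.
print(pay_per_record_cost(5_000, 4.0))   # cost of 5,000 records at $4 per 1K
print(break_even_records(120.0, 4.0))    # volume where $120/month breaks even
```

With these numbers, 5,000 records cost $20 on a per-record plan, and the subscription only pays off above 30,000 records per month.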

API & developer support

Developer experience varies substantially. Premium services provide detailed API documentation, webhooks for workflow integration, and actual technical support. Free options typically lack structured documentation and offer limited integration capabilities.

If you’re connecting scraped data to other systems, this support matters.

Reliability & error handling

Paid solutions generally achieve 95%+ accuracy rates with built-in error handling and automated retries. Free scrapers require manual intervention when things break, making them unreliable for production environments where downtime costs money.

Legal considerations

U.S. courts have generally treated scraping of publicly available data as lawful, but it still violates Google’s Terms of Service. Premium providers implement responsible scraping practices to minimize risks, including proxy rotation and rate limiting. Free tools typically leave you to manage these risks yourself.

IGLeads.io (Balanced Option for Scalable Lead Gen)

IGLeads.io sits in an interesting spot. Launched in 2021, this cloud-based platform attempts to bridge the gap between simple scrapers and enterprise-grade solutions for teams who need reliable Google Maps data extraction without the complexity.

Key features

What makes IGLeads different is its multi-platform approach. You can extract data from 11+ platforms simultaneously, which saves time compared to running separate scrapers for each source. The cloud-based system means your computer doesn’t need to stay powered on during scraping jobs. Once your list is ready, they email you the results.

The platform includes several practical features:

- Unlimited email extraction without extra fees

- AI-powered keyword generator for more targeted leads

- One-click CSV exports

- Automated scheduled scraping

- Focuses exclusively on public data for legal compliance

Pricing

IGLeads keeps pricing straightforward with three monthly tiers:

- Starter Plan: $59.00/month for 10,000 emails monthly with 2 scraping seats

- Business Plan: $149.00/month for 50,000 emails monthly with 2 scraping seats

- Unlimited Plan: $299.00/month for unlimited emails with 2 scraping seats

Annual billing offers savings up to 47%. Throughout 2023, customers averaged $58.00 in savings using available coupons.

Pros & cons

| ✅ Pros | ❌ Cons |
| --- | --- |
| Cloud operation continues even when your computer is off | Google Maps searches yield fewer emails compared to other platforms |
| Multi-platform extraction beyond just Google Maps | 8-hour average scraping time can feel slow for urgent projects |
| No technical skills required | Geographic targeting accuracy has room for improvement |
| Customer support rated 4.4/5 consistently | Higher-tier plans needed for full functionality |

Speed & data limits

IGLeads typically delivers results within 8 hours for any scrape. Speed depends on server load and the volume of profiles being scraped. The platform prioritizes thoroughness over speed—one user noted, “Patience pays… Give each scrape 12 hours and the results are pretty amazing”.

Ideal use cases

IGLeads works well for:

- Email marketing campaigns needing regular list refreshes

- Industry-specific targeting (particularly effective for HVAC and similar sectors)

- Multi-platform lead generation across social media and business directories

- Cold outreach campaigns requiring verified contact information

- Small to mid-size businesses wanting cost-effective lead generation without technical complexity

ScrapeGraphAI (Best AI-Powered Free Google Maps Scraper)

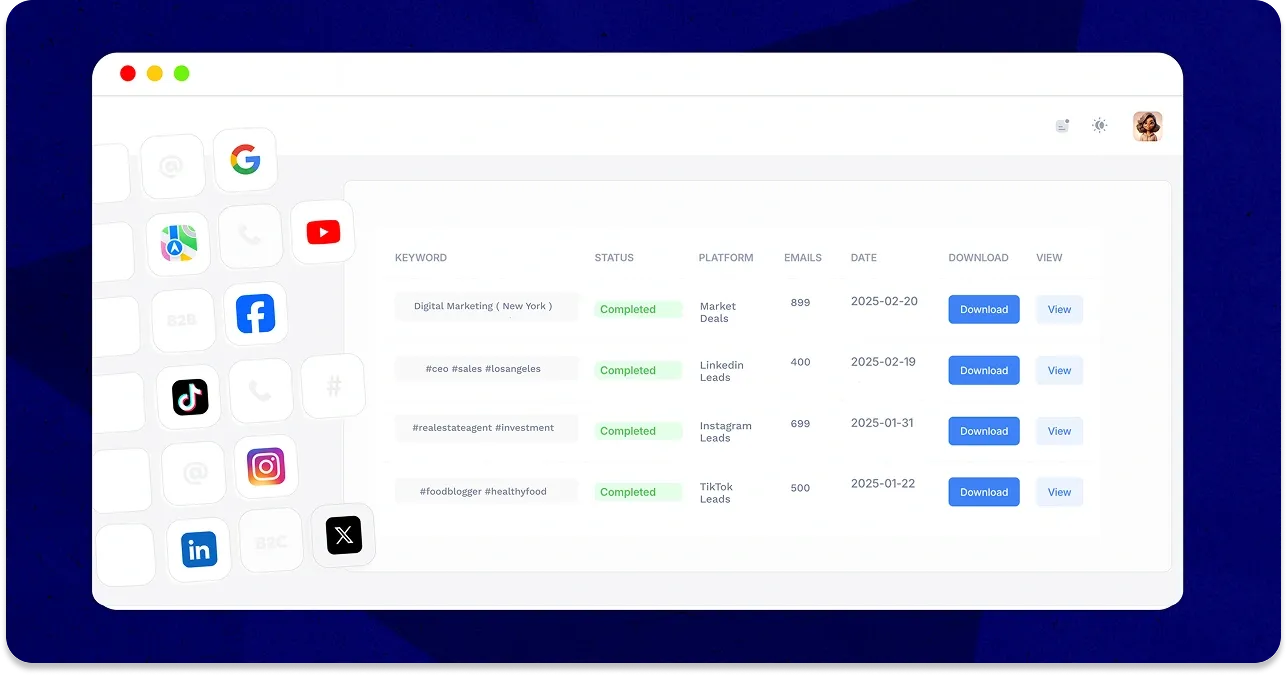

Image Source: ScrapeGraphAI

ScrapeGraphAI takes a different approach to Google Maps scraping. Instead of wrestling with complex code, you describe what you need in plain language and let the AI handle the technical stuff automatically. It’s built on large language models plus graph logic to create scraping pipelines from simple prompts.

For teams tired of maintaining brittle scraping scripts every time Google changes something, this could be interesting.

Key features

The platform offers both open-source library access and a premium API service. What sets it apart:

- AI-powered extraction that adapts to website changes automatically

- Built-in proxy rotation and browser automation

- Multiple scraping pipelines for different data extraction needs

- Programming language support for Python, JavaScript, and others

- AI framework integrations with LangChain, LlamaIndex, and similar tools

The biggest selling point? You don’t need to update your scraper every time Google tweaks their layout.

Pricing

ScrapeGraphAI offers a genuinely free tier that’s more generous than most:

- Free: $0/month with 50 credits and 10 requests per minute

- Starter: $17/month with 60,000 credits per year and 30 requests/minute

- Growth: $85/month with 480,000 credits per year and 60 requests/minute

- Pro: $425/month with 3 million credits per year and 200 requests/minute

- Enterprise: Custom pricing

Each API call includes HTTP requests, JavaScript rendering, proxy rotation, IP management, AI inference, and output formatting. That’s a lot bundled in for the price.

Pros & Cons

| ✅ Pros | ❌ Cons |
| --- | --- |
| Natural language prompts make setup fast | Fewer integrations than established tools |
| Handles website changes without code updates | Advanced features still need learning |
| Quick setup in minutes | Can be inconsistent on complex sites |
| Built-in production features with auto-recovery | Credit limits get expensive for large jobs |
| Pay-as-you-go pricing model | |

Speed & Data limits

Performance depends on your plan:

- Free tier: 10 requests per minute

- Starter: 30 requests per minute with 1 cron job

- Growth: 60 requests per minute with 5 cron jobs

- Pro: 200 requests per minute with 25 cron jobs

The credit system controls your extraction volume. Free users get 50 credits to test, while higher plans offer yearly allocations ranging into the millions.
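If you call a rate-limited API from your own script, it helps to throttle on the client side so you stay under the plan limit instead of collecting rejected requests. The following is a minimal sketch of a fixed-interval limiter; the class name and the commented-out `call_api` function are hypothetical and not part of any ScrapeGraphAI SDK.

```python
import time


class MinIntervalLimiter:
    """Space out calls so they never exceed a per-minute request budget."""

    def __init__(self, requests_per_minute, clock=time.monotonic, sleep=time.sleep):
        self.interval = 60.0 / requests_per_minute
        self._clock = clock
        self._sleep = sleep
        self._next_allowed = None  # earliest time the next call may start

    def wait(self) -> float:
        """Block until the next call is allowed; return the seconds slept."""
        now = self._clock()
        delay = 0.0
        if self._next_allowed is not None and now < self._next_allowed:
            delay = self._next_allowed - now
            self._sleep(delay)
            now = self._next_allowed
        self._next_allowed = now + self.interval
        return delay


# 10 requests per minute is the free-tier limit quoted above.
limiter = MinIntervalLimiter(requests_per_minute=10)

# Typical use (call_api is a placeholder for your own client code):
# for prompt in prompts:
#     limiter.wait()
#     call_api(prompt)
```

At 10 requests per minute the limiter enforces a 6-second gap between calls; raising the plan only requires changing the constructor argument.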

Ideal use cases

ScrapeGraphAI works well for:

- RAG pipelines: Feed real-time web data into AI models

- Autonomous agents: Let AI systems research and extract data independently

- Real-time data collection: Pull live information for dynamic enrichment

- Small to medium projects: Perfect for researchers or small businesses who want Google Maps data without coding headaches

Bottom line: If you’re new to Google Maps scraping and prefer AI assistance over complex coding, ScrapeGraphAI offers a solid entry point. Just be aware that credit limits can get expensive if you’re running large-scale extractions.

Apify (Most Flexible Paid Google Maps Scraper)

Apify dominates the premium Google Maps scraping market with its cloud-based approach that turns websites into APIs through customizable workflows. This isn’t just another data extractor—it’s a full scraping infrastructure that handles the heavy lifting while you focus on results.

Apify key features

Apify’s Google Maps Scraper comes loaded with capabilities that solve the problems most scrapers can’t handle:

Advanced geographic targeting ⭐⭐⭐⭐⭐ with location names, precise coordinates, radius filtering (1-100 miles), and custom polygon boundaries. No more being stuck with broad, useless searches.

Smart data collection ⭐⭐⭐⭐ featuring systematic area coverage and automatic duplicate removal. The system actually thinks about what you’re trying to accomplish.

Comprehensive business intelligence ⭐⭐⭐⭐⭐ extraction including names, addresses, phone numbers, websites, ratings, hours, and more. You get the full picture, not just basic contact info.

Bypass Google’s 120-result limit ⭐⭐⭐⭐⭐ through custom search areas. This alone makes Apify worth considering if you need serious volume.

Contact enrichment add-on ⭐⭐⭐⭐ scans target websites to find email addresses, phone numbers and social media links.

The platform also splits large maps into smaller, detailed mini-maps at optimal zoom levels (usually 16) to maximize results. Smart engineering that actually works.
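The general idea behind this kind of map splitting can be sketched in a few lines: divide a bounding box into a grid of smaller tiles, search each tile separately, then drop duplicates that show up in more than one tile. This is an illustration of the technique only, not Apify’s implementation; the `place_id` key and the function names are assumptions.

```python
from typing import Iterator, NamedTuple


class Tile(NamedTuple):
    south: float
    west: float
    north: float
    east: float


def split_bbox(south, west, north, east, rows, cols) -> Iterator[Tile]:
    """Yield rows x cols equal tiles covering the bounding box.

    Simplified: ignores boxes that cross the antimeridian.
    """
    lat_step = (north - south) / rows
    lng_step = (east - west) / cols
    for r in range(rows):
        for c in range(cols):
            yield Tile(
                south + r * lat_step,
                west + c * lng_step,
                south + (r + 1) * lat_step,
                west + (c + 1) * lng_step,
            )


def dedupe(results, key="place_id"):
    """Keep the first record seen for each unique key, preserving order."""
    seen = set()
    unique = []
    for item in results:
        if item[key] not in seen:
            seen.add(item[key])
            unique.append(item)
    return unique


# Example: a 1-degree box split into a 2 x 2 grid gives four search areas.
tiles = list(split_bbox(40.0, -74.0, 41.0, -73.0, rows=2, cols=2))
print(len(tiles), tiles[0])
```

Each tile becomes its own search, so a per-search result cap applies to a much smaller area. Deduplication matters because businesses near tile borders tend to appear in neighboring searches.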

Apify pricing

Apify uses a transparent pay-per-event model that’s refreshingly straightforward:

- Base charge of $4.00 per 1,000 places

- Additional charges for filters, contact details, reviews, and images

- Free plan includes $5.00 in monthly platform credits (renews automatically)

- Paid plans start at $39.00/month (Starter), with Scale ($199.00) and Business ($999.00) options

One catch: unused platform credits don’t roll over between billing cycles. Use them or lose them.

Pros & Cons

| ✅ Pros | ❌ Cons |
| --- | --- |
| Cloud-based infrastructure eliminates local setup hassles | Costs increase rapidly at scale ($4.00 per 1,000 records adds up) |
| Integrates with Zapier, Slack, Make, Airbyte, GitHub, Google Sheets, etc. | Platform lock-in: must run within the Apify ecosystem |
| Clean data export in JSON, Excel, or CSV formats | Depends on Apify’s developers for updates when Google changes |
| Up to 2X faster speed with the pay-per-event model | Some users report pricing unpredictability |

Speed & Data limits

Apify’s Google Maps Scraper can extract up to 15,000 results per search with no rate limits or quotas. You can boost run memory up to 8GB per run and execute multiple runs simultaneously for faster processing. The pay-per-event model has reportedly increased speed up to 2X compared to previous versions.

Bottom line: if you need scale and don’t mind paying for performance, Apify delivers.

Ideal use cases

Apify excels for teams that need serious infrastructure:

- Market research & analysis for competitive intelligence and geographic expansion

- Lead generation & sales through targeted prospecting and territory planning

- Real estate & development for site selection and market analysis

- App development needing location services and data enrichment

- Automation of research workflows replacing manual search tasks

If you’re running enterprise-level operations and need reliability over cost savings, Apify handles the complexity so you don’t have to.

Octoparse (Best No-Code Scraper for Beginners)

Octoparse takes a completely different approach. While other scrapers assume you know how to code, this platform lets you point, click, and scrape without writing a single line of code.

Key features

Octoparse is built for people who want results, not coding headaches. Its visual workflow builder makes Google Maps scraping accessible through:

- Ready-to-use Google Maps templates that work out of the box

- Auto-detection functions that spot locations, store names, phone numbers, and reviews automatically

- 24/7 cloud-based execution so your scrapes run even when your computer is off

- Multiple export formats including CSV, Excel, JSON, XML, plus direct Google Sheets integration

- AI-powered scraping assistant that guides you through workflow creation

The platform handles the technical stuff like IP rotation, pagination, and CAPTCHA solving automatically. You just focus on getting the data you need.

Pricing

Octoparse uses a tiered approach that scales with your needs:

- Free Plan: $0/month with 10 tasks, local execution only, up to 10K records per export (50K monthly limit)

- Standard Plan: $69/month (annual) or $83/month (monthly) with 100 tasks, cloud execution, IP rotation, residential proxies

- Professional Plan: $249/month with 250 tasks, up to 20 concurrent cloud processes, priority support

- Enterprise Plan: Custom pricing with 750+ tasks and 40+ concurrent processes

They also offer pay-per-result templates specifically for Google Maps at $0.20 to $1.50 per 1,000 records.

Pros & Cons

| ✅ Pros | ❌ Cons |
| --- | --- |
| Truly no-code interface anyone can use | Custom workflows can overwhelm casual users |
| Cloud-based execution runs unattended | Templates occasionally break |
| Export to multiple formats plus Zapier integration | Weak email extraction compared to competitors |
| Pre-built Google Maps templates ready to go | Large scrapes need to be split into smaller chunks |

Speed & Data limits

Performance varies by plan level:

- Cloud-based execution across multiple servers delivers fast extraction speeds

- Standard plan: Up to 3 concurrent processes

- Higher tiers: Scale to 20+ concurrent processes

- Handles both small and large-scale projects with proper configuration

The catch? The free plan caps you at 10K records per export and 50K per month. Paid plans remove these limits entirely.

Ideal use cases

Octoparse works best for:

- Beginners who need Google Maps data but don’t want to learn coding

- Small businesses requiring lead generation without technical overhead

- Teams that need scheduled, automated business data extraction

- Projects requiring clean data in multiple export formats

- Users focused on basic business information rather than email hunting

Bottom line: If coding intimidates you or complex setups sound like a nightmare, Octoparse offers the most accessible path to Google Maps data extraction.

Scrap.io (Best for Bulk Lead Generation)

Scrap.io cuts straight to what matters: turning Google Maps into a lead generation machine without the technical headaches. If you need to extract massive datasets fast, this tool gets the job done.

Core features

Scrap.io’s strength is comprehensive data extraction, with capabilities that competitors struggle to match:

Real-time data extraction ⭐⭐⭐⭐⭐ from over 200 million businesses across 195 countries. You’re getting current information, not stale database records.

Direct extraction ⭐⭐⭐⭐ from Google Maps and associated websites. The data stays fresh because it’s pulled live, not cached from months ago.

Superior filtering system ⭐⭐⭐⭐ with 17 different filters (competitors like OutScraper only offer 9). More filtering means you pay for qualified leads, not junk data.

Comprehensive data fields ⭐⭐⭐⭐⭐ including emails, social media profiles, and website technologies. It’s not just addresses and phone numbers.

What sets it apart? You can extract data for an entire country in just two clicks. No coding required. The platform processes 5,000 queries per minute, which crushes most alternatives.

Scrap.io pricing breakdown

It keeps pricing transparent across several tiers:

Monthly Plans:

- Basic: €49/month – 10,000 exports, city-level searches

- Professional: €99/month – 20,000 exports, level 2 division searches

- Agency: €199/month – 40,000 exports, level 1 division searches

- Company: €499/month – 100,000 exports, country-wide searches

Yearly plans offer significant discounts (€35-350/month). New users get 100 free leads during a 7-day trial.

Pros & Cons

| ✅ Pros | ❌ Cons |
| --- | --- |
| Cloud-based solution, no software installation needed | Free plan caps at just 100 leads |
| Advanced filtering before extraction – pay only for qualified leads | Advanced filtering locked behind higher-tier plans |
| Transparent pricing, no surprise costs for large extractions | Geographic targeting limited on lower plans |
| Real-time data ensures zero duplicate contacts | Whole-country extraction only on most expensive tier |

Speed & performance

Scrap.io delivers impressive performance metrics:

- Processes 5,000 queries per minute

- Handles datasets of 140,000+ and 210,000+ results successfully

- Export limits tied to your plan (10,000-100,000 monthly)

- Extracts 1,600+ results per search versus competitors’ 120-result cap

When Scrap.io makes sense

Scrap.io works particularly well for:

- High-volume lead generation across entire geographic regions

- Targeted prospecting using multiple filtering criteria

- Real estate market analysis requiring price range and rating filters

- Social media marketing campaigns needing social profile data

- Companies requiring fresh data rather than outdated database information

Bottom line: Scrap.io is built for scale. If you need massive, current datasets with solid filtering, this tool delivers without the technical complexity of other solutions.

Decodo (Best API-Based Scraper for Developers)

Decodo positions itself as the developer’s scraper – the tool you reach for when you need clean API access without the bloat. Previously known as Smartproxy, this isn’t another point-and-click solution. It’s built for programmers who want to integrate Google Maps data directly into their applications.

Key features

Decodo’s Web Scraping API pairs a robust scraper with over 125 million residential, mobile, ISP, and datacenter proxies. What sets it apart for developers:

- Built-in SERP scraper optimized specifically for Google Maps

- API playground where you can test requests live, build queries, and generate code snippets

- Ready-made templates with parsing functionality already handled

- Flexible output formats – choose between raw HTML or structured JSON

- JavaScript rendering (Advanced plan only)

- Location targeting across 195+ countries with city-level precision

The API playground alone saves hours of trial-and-error development time.

Pricing

Decodo runs two distinct pricing models:

Core plan: $29 for 90K requests ($0.32/1K) with basic features, scaling to 50M requests at $0.08/1K with volume discounts.

Advanced plan: $29 for 23K requests ($1.25/1K), including JavaScript rendering and worldwide targeting.

Both include a 7-day free trial and 14-day money-back guarantee.

Pros & Cons

| ✅ Pros | ❌ Cons |
| --- | --- |
| 100% success rate for data extraction | Complex pricing structure can be confusing |
| No setup required with pre-built scrapers | Core plan has location restrictions |
| Award-winning support with solid documentation | Advanced features locked to higher-priced plans |
| Clean UI with practical examples | Users report inflexible pricing concerns |

Speed & Data limits

Performance metrics tell the story:

- 99.99% uptime with sub-0.3s response times

- 30+ requests per second across all plans

- Near 100% success rate scraping Google and similar sites

- Zero CAPTCHA issues thanks to integrated browser fingerprinting

Ideal use cases

Decodo works best for:

- Developers integrating Google Maps data into apps or dashboards

- Web scraping protected sites using residential proxy networks

- SEO tracking and competitor analysis requiring consistent data feeds

- Travel fare aggregation needing location-specific pricing

- Ad verification and market research with geographic targeting requirements

If you’re comfortable with APIs and need reliable, scalable access to Google Maps data, Decodo delivers without the complexity of enterprise solutions.

Pricing & Cost Modeling

How much does a Google Maps scraper cost?

Most paid Google Maps scrapers range from $40 to $300 per month, depending on features, speed, and export volume. Some platforms use pay-per-record pricing ($1–$4 per 1,000 records), while others rely on monthly subscriptions. Free tiers exist, but they’re only meant for testing.

When you compare these prices to Google’s official API, the difference is dramatic. Scraping tools often reduce costs by 90–95%, which explains why businesses prefer them for large-scale lead generation.

Example cost scenarios (5K, 20K records)

Small marketing agency needs 5,000 restaurant contacts monthly? Here’s what you’re actually paying:

| Solution | API/Record Costs | Developer/Infrastructure | Total Monthly |
| --- | --- | --- | --- |
| Google Maps API | $280.00 | $800.00+ | $1,080.00+ |
| Scraping Tool | $99.00 | Minimal | $99.00 |

Savings: roughly 91% cost reduction

Scale that to 20,000 monthly business records and the gap becomes absurd:

| Solution | API/Record Costs | Infrastructure | Total Monthly |
| --- | --- | --- | --- |
| Google Maps API | $1,120.00 | $2,500.00+ | $3,620.00+ |
| Scraping Tool | $199.00 | Included | $199.00 |

Savings: 95% cost reduction

This disparity isn’t an accident. Google’s API pricing assumes you’re building the next Uber, not extracting lead lists for your sales team.
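The savings figures can be checked directly from the monthly totals in the two tables. The helper below is a plain percentage calculation; the function name is illustrative.

```python
def savings_pct(baseline: float, alternative: float) -> float:
    """Percentage saved by choosing `alternative` over `baseline`."""
    return (1 - alternative / baseline) * 100


# Monthly totals (API total, scraping tool total) from the tables above.
scenarios = {
    "5,000 records": (1080.00, 99.00),
    "20,000 records": (3620.00, 199.00),
}

for name, (api_total, tool_total) in scenarios.items():
    print(f"{name}: {savings_pct(api_total, tool_total):.1f}% lower")
```

Using the totals exactly as listed, this works out to about 90.8% and 94.5%. Because the API totals are lower bounds (note the “+”), actual savings may be somewhat higher.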

Hidden costs & overages

The advertised pricing is just the starting point. Users regularly get hit with unexpected charges after a single scrape burns $30.00 in credits. Common gotchas include:

- Monthly credits that vanish after 30 days whether you use them or not

- “Additional services” charges that appear without warning

- Free tier limits that vary wildly across features (25 units here, 500 there)

- Separate billing for anything outside your base package

Execution-time pricing models are particularly brutal – you pay for the time whether you get useful data or garbage results. Lead-based pricing flips this: you only pay for extracted records, typically saving 60-80% compared to time-based billing.

When evaluating scrapers, always ask: what happens when you need 10x more data next month?

Legal, Ethics & Terms of Service Risks

Google Maps scraping exists in a legal gray area that most tool vendors won’t discuss honestly. Let’s cut through the marketing fluff and examine the real risks you’re facing.

Google’s Terms of Service overview

Google’s ToS explicitly bans scraping. Their terms state clearly: “Google Maps Content cannot be exported, extracted, or otherwise scraped for usage outside the Services”. This prohibition covers:

- External hosting of Maps content

- Bulk downloading of data

- Local storage of business information

But here’s the key distinction: breaking Google’s ToS is a civil contract matter, not a criminal offense. Google fights scraping to protect its revenue streams and server resources. Accessing publicly available data is not, by itself, prohibited by law.

Risk of bans & account issues

So what actually happens when you scrape Google Maps? For standard business data collection, the reality is:

- Most common outcome: Temporary IP bans lasting 15-60 minutes

- Possible escalation: Account suspension if you’re logged into Google services

- Rare scenarios: Cease and desist letters for massive operations

Since most scraping happens without logging into Google accounts, the risk of permanent account deletion stays minimal.

Data privacy & compliance

Beyond Google’s rules, you’re dealing with broader legal frameworks. Unauthorized data collection can trigger:

- Computer Fraud and Abuse Act violations in the US

- GDPR penalties in Europe for personal data extraction

Bottom line for GDPR compliance: stick to business information only. Avoid scraping personal details like customer photos or individual contact info. Collecting business data without consent could still trigger privacy regulations, but the enforcement focus remains on personal data protection.
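One practical way to apply this is an allowlist: keep only the business fields you have decided to collect and drop everything else before the record is stored. The field names below are hypothetical, and which fields count as personal data depends on your jurisdiction, so treat the list as an assumption to adapt.

```python
# Hypothetical allowlist of business-level fields; adjust to your own schema.
BUSINESS_FIELDS = frozenset(
    {"name", "address", "phone", "website", "category", "rating", "hours"}
)


def keep_business_fields(record: dict, allowed=BUSINESS_FIELDS) -> dict:
    """Return a copy of the record containing only allowlisted fields."""
    return {key: value for key, value in record.items() if key in allowed}


raw = {
    "name": "Example Cafe",
    "phone": "555-0100",
    "reviewer_name": "Jane D.",          # personal data: dropped
    "reviewer_photo": "https://example.com/p.jpg",  # personal data: dropped
}
print(keep_business_fields(raw))
```

An allowlist is safer than a blocklist here: a new, unexpected field in the scraped output is dropped by default instead of slipping through.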

The legal landscape isn’t black and white, but understanding these boundaries helps you scrape smarter, not recklessly.

Best Practices & Scaling Strategies

Google Maps scraping success isn’t just about picking the right tool. You need smart technical strategies to avoid getting blocked and keep your data pipeline running smoothly.

Proxy rotation & delays

IP rotation is non-negotiable if you want to scrape at any meaningful scale. Stick with the same IP address for too long and Google will ban you—it’s not a matter of if, but when. Proxy servers create a buffer between your scraper and Google, making it much harder for their systems to detect and block your requests.

For location-specific data, look for proxy networks that cover 200+ countries with geo-targeting capabilities. This lets you pull results as if you’re searching from specific cities or regions, which can be crucial for local business data.

Human behavior mimicking works. Add random scrolling, clicks, and strategic pauses between requests. Google’s anti-bot systems are sophisticated—they’re looking for patterns that scream “automated tool”.
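A minimal sketch of round-robin proxy rotation with randomized pauses might look like this. The proxy URLs are placeholders, and the helper names are made up for this example; the `proxies` dictionary shape is the one the `requests` library accepts.

```python
import itertools
import random

# Placeholder proxy endpoints; replace with your provider's addresses.
PROXIES = [
    "http://proxy-a.example:8000",
    "http://proxy-b.example:8000",
    "http://proxy-c.example:8000",
]


def proxy_cycle(proxies):
    """Yield requests-style proxy dicts in endless round-robin order."""
    for proxy in itertools.cycle(proxies):
        yield {"http": proxy, "https": proxy}


def jittered_delay(base=2.0, jitter=1.5, rng=random):
    """Return a pause in seconds: a fixed base plus a random extra."""
    return base + rng.uniform(0, jitter)


rotation = proxy_cycle(PROXIES)
print(next(rotation)["http"], round(jittered_delay(), 2))

# Typical use inside a scraping loop:
# for url in urls:
#     response = requests.get(url, proxies=next(rotation), timeout=10)
#     time.sleep(jittered_delay())
```

The random component matters as much as the rotation: perfectly regular intervals are an easy pattern to flag, even when requests come from different IPs.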

Incremental vs full scraping

Don’t try to extract everything at once. Break large geographic areas into smaller, targeted chunks for much better efficiency. Here’s how smart scrapers approach it:

- Daily/weekly updates to track business changes and new listings

- On-demand refreshing when you need fresh data for competitive analysis

- Incremental collection to fill gaps from previous scrapes

This approach saves resources while keeping your data current. Instead of re-scraping entire cities every time, focus on what’s actually changed.
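Finding what changed comes down to comparing two snapshots keyed by a stable identifier. The sketch below assumes each snapshot is a dictionary mapping a place ID to its record; the IDs and fields shown are invented for the example.

```python
def diff_snapshots(previous: dict, current: dict):
    """Compare two {place_id: record} snapshots.

    Returns three sorted lists of IDs: added, removed, and changed.
    """
    prev_ids, curr_ids = set(previous), set(current)
    added = sorted(curr_ids - prev_ids)
    removed = sorted(prev_ids - curr_ids)
    changed = sorted(
        pid for pid in prev_ids & curr_ids if previous[pid] != current[pid]
    )
    return added, removed, changed


last_week = {
    "p1": {"name": "Cafe Uno", "phone": "555-0101"},
    "p2": {"name": "Bistro Due", "phone": "555-0102"},
}
this_week = {
    "p1": {"name": "Cafe Uno", "phone": "555-0199"},  # phone changed
    "p3": {"name": "Trattoria Tre", "phone": "555-0103"},  # new listing
}

print(diff_snapshots(last_week, this_week))
```

Here `p3` is new, `p2` has disappeared, and `p1` has a changed phone number, so only those three records need attention on the next run.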

Monitoring & error handling

Most scraping operations fail because people don’t monitor what’s happening. That’s a costly mistake. Build basic error handling into your workflow so failed requests don’t crash your entire operation:

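    import requests

    # url and headers are assumed to be defined earlier in your script
    try:
        # A timeout keeps one stalled request from hanging the whole run
        response = requests.get(url, headers=headers, timeout=10)
        response.raise_for_status()  # raise on 4xx/5xx responses
    except requests.exceptions.RequestException as e:
        print(f'Error fetching page: {e}')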

Google changes their site structure regularly. What works perfectly today might break next week. Build your scrapers with flexibility in mind and monitor for sudden drops in success rates.
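Building on the try/except pattern above, a retry wrapper with exponential backoff lets transient failures recover on their own while still surfacing persistent ones. This is a sketch under assumptions: `fetch` is any callable you supply (for example, a small function wrapping `requests.get`), and the attempt count and delays are arbitrary defaults.

```python
import time


def fetch_with_retries(fetch, url, max_attempts=4, base_delay=1.0, sleep=time.sleep):
    """Call fetch(url), retrying failures with exponential backoff.

    Waits base_delay, then 2x, then 4x, and so on between attempts.
    Raises RuntimeError once every attempt has failed.
    """
    last_error = None
    for attempt in range(max_attempts):
        try:
            return fetch(url)
        except Exception as exc:  # narrow this to your client's error type
            last_error = exc
            if attempt < max_attempts - 1:
                sleep(base_delay * 2 ** attempt)
    raise RuntimeError(
        f"giving up on {url} after {max_attempts} attempts"
    ) from last_error


# Typical use with requests (fetch_page is a hypothetical helper):
# def fetch_page(url):
#     response = requests.get(url, timeout=10)
#     response.raise_for_status()
#     return response.text
#
# html = fetch_with_retries(fetch_page, "https://example.com")
```

Counting how often the final `RuntimeError` is raised gives you a simple success-rate signal: a sudden jump usually means the page structure or the blocking behavior has changed.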

The Real Performance Breakdown

| Scraper | Best For | Pricing | Free Option | Speed | Key Features | Ease of Use | Data Quality |
| --- | --- | --- | --- | --- | --- | --- | --- |
| ScrapeGraphAI | AI-Powered Automation | $17-425/month | 50 credits, 10 req/min | 10-200 req/min | AI-powered extraction, Multi-language support, LangChain integration | ★★★★★ | ★★★★☆ |
| Apify | Flexible Workflows | $4.00/1K records | $5 platform credits | 15K results/search | Advanced geo-targeting, Contact enrichment, Cloud-based infrastructure | ★★★☆☆ | ★★★★★ |
| Octoparse | No-Code Solutions | $69-249/month | 10K records/export | 24/7 cloud execution | Ready-to-use templates, Point-and-click interface, Multiple export formats | ★★★★★ | ★★★★☆ |
| Scrap.io | Bulk Lead Generation | €49-499/month | 100 free leads | 5,000 queries/min | 17 filtering options, Real-time extraction, 200M+ business database | ★★★★☆ | ★★★★★ |
| Decodo | Developer API Access | $0.32-1.25/1K | 7-day trial | 30+ req/sec | 125M+ proxies, API playground, JavaScript rendering | ★★★☆☆ | ★★★★★ |
| IGLeads | Multi-Platform Scraping | $59-299/month | Limited trial | 8-hour avg/scrape | 11+ platform support, Unlimited email extraction, AI keyword generator | ★★★★★ | ★★★★☆ |

Looking at this breakdown, a few things become clear fast. If you’re just testing the waters, ScrapeGraphAI’s 50 free credits beat everyone else’s trial offerings. But if you need serious volume, that 10 req/min limit will frustrate you quickly.

Apify wins on pure data quality and flexibility, but you’ll pay for it. That $4.00 per 1K records adds up when you’re pulling 50K+ leads monthly. Scrap.io delivers similar quality with better bulk pricing, especially if you’re willing to commit to higher tiers.

For non-technical users, Octoparse is the clear winner. Point-and-click simplicity without sacrificing much on performance. Decodo sits at the opposite end – powerful for developers who want full control, but expect a steeper learning curve.

IGLeads strikes a solid balance: reliable data quality, transparent pricing, and easy CSV exports. While not the fastest scraper on the market, its focus on accuracy and compliance makes it a dependable choice for businesses that value clean data over raw speed.

Bottom line: Match the tool to your actual workflow, not the marketing promises. Budget-conscious testers should start with ScrapeGraphAI. Serious lead gen operations will outgrow free tiers fast and should evaluate Scrap.io or Apify based on volume needs.

Conclusion & Recommendations

Bottom line: the free vs paid scraper decision comes down to what you’re actually trying to accomplish and how much friction you can tolerate.

ScrapeGraphAI offers the most generous free tier with 50 credits, making it perfect for testing or small-scale projects. But if you’re serious about ongoing data extraction, you’ll hit walls fast. Premium solutions deliver the reliability, speed, and data completeness that actually matter for business use – though you’ll pay $0.20 to $4.00 per thousand records depending on the service.

The cost advantage over Google’s official API remains staggering. We’re talking 92-95% savings at scale, which explains why businesses keep choosing third-party scrapers despite the technical hurdles.

Here’s how to choose:

Non-technical users should stick with Octoparse. The point-and-click interface actually works, and you won’t need to learn coding or mess with configurations.

Developers will get more value from Decodo’s API access and comprehensive documentation. The 30+ requests per second throughput speaks for itself.

Large-scale operations needing massive datasets should look at Scrap.io. Processing entire countries at 5,000 queries per minute with minimal setup beats wrestling with complex configurations.

Multi-platform prospecting works best with IGLeads – you’re not limited to just Google Maps data, which opens up lead sources most competitors can’t touch.

A word on legal risk: Google’s Terms of Service explicitly prohibit scraping, but that is a civil contract issue, not criminal law. Most users face temporary IP bans lasting 15-60 minutes rather than serious legal consequences. Still, implement proxy rotation and error handling to reduce the chance of detection.
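Proxy rotation with error handling can be sketched roughly as follows. This is a minimal illustration, not any vendor’s implementation: `Blocked`, `fetch_with_rotation`, and the `fetch` callable are hypothetical names, and the caller is assumed to supply a `fetch(url, proxy)` function that raises `Blocked` when a request is refused.

```python
import itertools
import time
from typing import Callable, Sequence


class Blocked(Exception):
    """Hypothetical error raised by the caller's fetch function on a refused request."""


def fetch_with_rotation(
    url: str,
    proxies: Sequence[str],
    fetch: Callable[[str, str], str],
    max_attempts: int = 5,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Cycle through proxies, waiting exponentially longer after each block."""
    if not proxies:
        raise ValueError("at least one proxy is required")
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    pool = itertools.cycle(proxies)
    for attempt in range(max_attempts):
        proxy = next(pool)  # move to the next proxy on every attempt
        try:
            return fetch(url, proxy)
        except Blocked:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the error to the caller
            sleep(base_delay * 2 ** attempt)  # 1s, 2s, 4s, ... by default
    raise AssertionError("unreachable")
```

The default delays are illustrative only. If a ban really lasts 15-60 minutes, a much longer cooldown for the affected proxy would be needed than a few seconds of backoff.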

The right scraper transforms your business intelligence capabilities without the API price tag. Whether you need lead lists for outreach, competitor monitoring for market research, or location data for app development, these tools unlock value that would otherwise cost thousands monthly through official channels.

Choose based on your technical skills, volume needs, and budget constraints. Then start extracting the data that’s been sitting there waiting for you.

Key Takeaways

- Massive cost savings: Third-party scrapers deliver up to 95% savings compared to the Google Maps API. For businesses running large-scale data collection (tens of thousands of records per month), this translates into thousands of dollars saved every quarter while still getting access to the same location data.

- Free scrapers hit walls quickly: Tools like ScrapeGraphAI are excellent for testing or very small projects, but their speed and data caps (10–30 records per minute, 50–100 credits total) are quickly exhausted. Paid solutions remove these bottlenecks, scaling smoothly to 20K+ records per month or more without interruptions.

- Technical skill matters: Your background determines which scraper is the right fit. Developers will thrive with API-first tools like Decodo, where they can fine-tune queries and integrate with other systems. Non-technical users are better served by no-code platforms like Octoparse, where point-and-click scraping eliminates the need to write scripts or manage proxies manually.

- Legal risks are manageable: While Google’s Terms of Service prohibit scraping, enforcement typically results in temporary IP bans (15–60 minutes) rather than serious legal consequences. Using proxy rotation, request throttling, and error handling allows most teams to run scrapers safely at scale without major interruptions.

- IGLeads.io stands out as the best long-term option: Unlike single-purpose scrapers limited to Maps, IGLeads.io aggregates multiple lead sources (not just location data). This gives businesses a broader pool of prospects across industries, helping with sales outreach, competitive intelligence, and long-term growth. It’s the only platform in this list that lets you build a true multi-channel lead pipeline instead of relying on one data source.

More tools and guides for Google Maps

- Why Scrape Google Maps? Use Cases for Sales, Marketing & SEO

- What is a Google Maps extractor? GMB Scraper, APIs & More

- Is It Legal to Scrape Google Maps? What You Should Know

- How to Scrape Google Maps Emails in 2025: Tools & Guide

- Best 9 Google Maps Email Scrapers 2025

Compare the Best Scrapers Available

- Best 9 YouTube Email Scrapers 2025

- Best 9 Twitter Email Scrapers 2025

- Best 9 TikTok Email Scrapers 2025

- Best 9 Google Maps Email Scrapers 2025

- Best 9 LinkedIn Email Scrapers 2025

- Best 9 Google Email Scrapers 2025

- Best 9 Facebook Email Scrapers 2025

- Best 9 Instagram Email Scrapers 2025

Free vs Paid Scrapers FAQs

Are there free Google Maps scrapers?

Yes. Free tools like ScrapeGraphAI and Octoparse (Free Plan) exist, but they come with strict speed and record limits. They’re best for testing, not large-scale lead generation.

Is Outscraper free?

Outscraper offers a limited free trial, but it is not completely free. After the credits run out, you’ll pay per record or via subscription.

What is the difference between free and paid scrapers?

Free scrapers typically offer limited features and slower speeds, while paid options provide faster extraction, more comprehensive data, and advanced capabilities like proxy rotation and error handling. Paid scrapers also tend to have better reliability and customer support.

How much can a scraper save compared to Google’s API?

Using a scraper can result in significant cost savings compared to Google’s API. For example, extracting 5,000 records monthly can lead to a 92% cost reduction, while scaling to 20,000 records can save up to 95% on data extraction costs.

Is it legal to scrape Google Maps?

While scraping violates Google’s Terms of Service, it’s generally considered a civil rather than criminal issue. The most common consequence is a temporary IP ban lasting 15-60 minutes. However, it’s important to implement best practices like proxy rotation to minimize detection risks.

Which scraper is best for non-technical users?

IGLeads.io is one of the top choices for non-technical users thanks to its intuitive interface and no-code workflow. You don’t need programming skills to extract leads. Just enter your target location or keyword, and IGLeads.io delivers verified business data in CSV format, ready to upload into your CRM or outreach tool. It’s built for sales reps, marketers, and agencies who want results without the complexity of coding.

How can I improve scraping efficiency?

To improve scraping efficiency, consider implementing proxy rotation, using incremental scraping techniques, and breaking large geographic areas into smaller targeted sectors. Additionally, monitoring your scraping activities and implementing proper error handling can help maintain consistent performance.
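Two of these techniques, splitting a large area into sectors and scraping incrementally, can be sketched in a few lines. This is an illustration under stated assumptions: `split_bbox` and `new_records` are hypothetical helper names, the bounding box is given as `(south, west, north, east)` in degrees, and each record is assumed to carry a stable `"id"` field. The grid split is a plain even division and does not handle boxes that cross the antimeridian.

```python
from typing import Dict, Iterator, List, Set, Tuple

BBox = Tuple[float, float, float, float]  # (south, west, north, east)


def split_bbox(bbox: BBox, rows: int, cols: int) -> Iterator[BBox]:
    """Yield rows * cols equal tiles covering the bounding box."""
    south, west, north, east = bbox
    lat_step = (north - south) / rows
    lng_step = (east - west) / cols
    for r in range(rows):
        for c in range(cols):
            yield (
                south + r * lat_step,
                west + c * lng_step,
                south + (r + 1) * lat_step,
                west + (c + 1) * lng_step,
            )


def new_records(records: List[Dict], seen_ids: Set[str]) -> List[Dict]:
    """Incremental step: keep only records not stored by an earlier run."""
    fresh = []
    for rec in records:
        if rec["id"] not in seen_ids:
            seen_ids.add(rec["id"])  # remember it so later runs skip it
            fresh.append(rec)
    return fresh


tiles = list(split_bbox((0.0, 0.0, 2.0, 4.0), rows=2, cols=2))
print(len(tiles), "tiles; first:", tiles[0])
```

Each tile can then be queried separately, and `new_records` filters out listings already collected, so overlapping tiles or repeat runs don’t produce duplicates.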